Notes to section editors:

- Describe goals, technologies, key features, etc.

- This chapter does not replace your project-specific technical documentation

- You may include a reference to your community wiki space.

- Try to avoid references to specific time-dependent aspects of your project that might render this whitepaper obsolete quickly.

- Please keep the length of your section to 1-2 pages.

3.1 FD.io

Editor: Ole Troan

3.2 ONAP

Editor: Chaker Al-Hakim

(DRAFT)

Introduction

The ONAP project addresses the rising need for a common automation platform for telecommunication, cable, and cloud service providers—and their solution providers—to deliver differentiated network services on demand, profitably and competitively, while leveraging existing investments.

The challenge that ONAP meets is to help operators of telecommunication networks keep up with the scale and cost of the manual changes required to implement new service offerings, from installing new data center equipment to, in some cases, upgrading on-premises customer equipment. Many operators are seeking to exploit SDN and NFV to improve service velocity, simplify equipment interoperability and integration, and reduce overall CapEx and OpEx. In addition, the current, highly fragmented management landscape makes it difficult to monitor and guarantee service-level agreements (SLAs). These challenges remain very real as ONAP delivers its fourth release.

ONAP addresses these challenges by developing global, massive-scale (multi-site and multi-VIM) automation capabilities for both physical and virtual network elements. It facilitates service agility by supporting data models for rapid service and resource deployment, by providing a common set of open and interoperable northbound REST APIs, and by supporting model-driven interfaces to the networks. ONAP’s modular and layered nature improves interoperability and simplifies integration, allowing it to support multiple VNF environments by integrating with multiple VIMs, VNFMs and SDN controllers, as well as legacy equipment (PNFs). ONAP’s consolidated xNF requirements publication enables commercial development of ONAP-compliant xNFs. This approach allows network and cloud operators to optimize their physical and virtual infrastructure for cost and performance, while ONAP’s use of standard models reduces the cost of integrating and deploying heterogeneous equipment. All this is achieved while minimizing management fragmentation.

The ONAP platform allows end-user organizations and their network/cloud providers to collaboratively instantiate network elements and services in a rapid and dynamic way, and supports a closed control loop process that enables real-time response to actionable events. Designing, engineering, planning, billing and assuring these dynamic services imposes three major requirements:

- A robust design framework that allows the service to be specified in all aspects: modeling the resources and relationships that make up the service, specifying the policy rules that guide the service behavior, and specifying the applications, analytics and closed control loop events needed for the elastic management of the service

- An orchestration and control framework (Service Orchestrator and Controllers) that is recipe/policy-driven, providing automated instantiation of the service when needed and managing service demands in an elastic manner

- An analytic framework that closely monitors the service behavior during the service lifecycle based on the specified design, analytics and policies to enable response as required from the control framework, to deal with situations ranging from those that require healing to those that require scaling of the resources to elastically adjust to demand variations.

To achieve this, ONAP decouples the details of specific services and supporting technologies from the common information models, core orchestration platform, and generic management engines (for discovery, provisioning, assurance etc.). Furthermore, it marries the speed and style of a DevOps/NetOps approach with the formal models and processes operators require to introduce new services and technologies. It leverages cloud-native technologies including Kubernetes to manage and rapidly deploy the ONAP platform and related components. This is in stark contrast to traditional OSS/Management software platform architectures, which hardcoded services and technologies, and required lengthy software development and integration cycles to incorporate changes.
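The three frameworks above can be illustrated with a deliberately small closed-loop sketch: design-time policies expressed as threshold rules, an analytics step that matches incoming events against them, and a control step that hands any required action to an orchestrator or controller. In ONAP these roles are spread across several platform components rather than living in one function; the `Event` and `Policy` types, metric names and action names below are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional


@dataclass
class Event:
    source: str   # hypothetical: name of the VNF instance that raised the event
    metric: str   # hypothetical metric name, e.g. "cpu_load"
    value: float


@dataclass
class Policy:
    metric: str
    threshold: float
    action: str   # hypothetical action names: "scale_out", "heal"


def analyze(event: Event, policies: List[Policy]) -> Optional[str]:
    """Analytics step: return the action of the first policy the event violates."""
    for policy in policies:
        if policy.metric == event.metric and event.value > policy.threshold:
            return policy.action
    return None


def control_loop(events: Iterable[Event], policies: List[Policy],
                 actuate: Callable[[str, str], None]) -> None:
    """Control step: hand each required action to an orchestrator/controller callback."""
    for event in events:
        action = analyze(event, policies)
        if action is not None:
            actuate(action, event.source)


if __name__ == "__main__":
    taken = []
    policies = [Policy("cpu_load", 0.8, "scale_out"), Policy("heartbeat_missed", 0, "heal")]
    events = [Event("vfw-1", "cpu_load", 0.95),
              Event("vfw-2", "cpu_load", 0.40),
              Event("vfw-3", "heartbeat_missed", 3)]
    control_loop(events, policies, lambda action, source: taken.append((action, source)))
    print(taken)  # [('scale_out', 'vfw-1'), ('heal', 'vfw-3')]
```

Keeping the policies as data rather than code is what lets the same loop serve both healing and scaling: only the designed rules change.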

The ONAP Platform enables service/resource independent capabilities for design, creation and lifecycle management, in accordance with the following foundational principles:

- Ability to dynamically introduce full service lifecycle orchestration (design, provisioning and operation) and service APIs for new services and technologies without the need for new platform software releases and without affecting operations for existing services

- Carrier-grade scalability including horizontal scaling (linear scale-out) and distribution to support a large number of services and large networks

- Metadata-driven and policy-driven architecture to ensure flexible and automated ways in which capabilities are used and delivered

- The architecture shall enable sourcing best-in-class components

- Common capabilities are ‘developed’ once and ‘used’ many times

- Core capabilities shall support many diverse services and infrastructures

Further, ONAP comes with a functional architecture, with component definitions and interfaces, which drives industry alignment in addition to the open source code.

ONAP Architecture

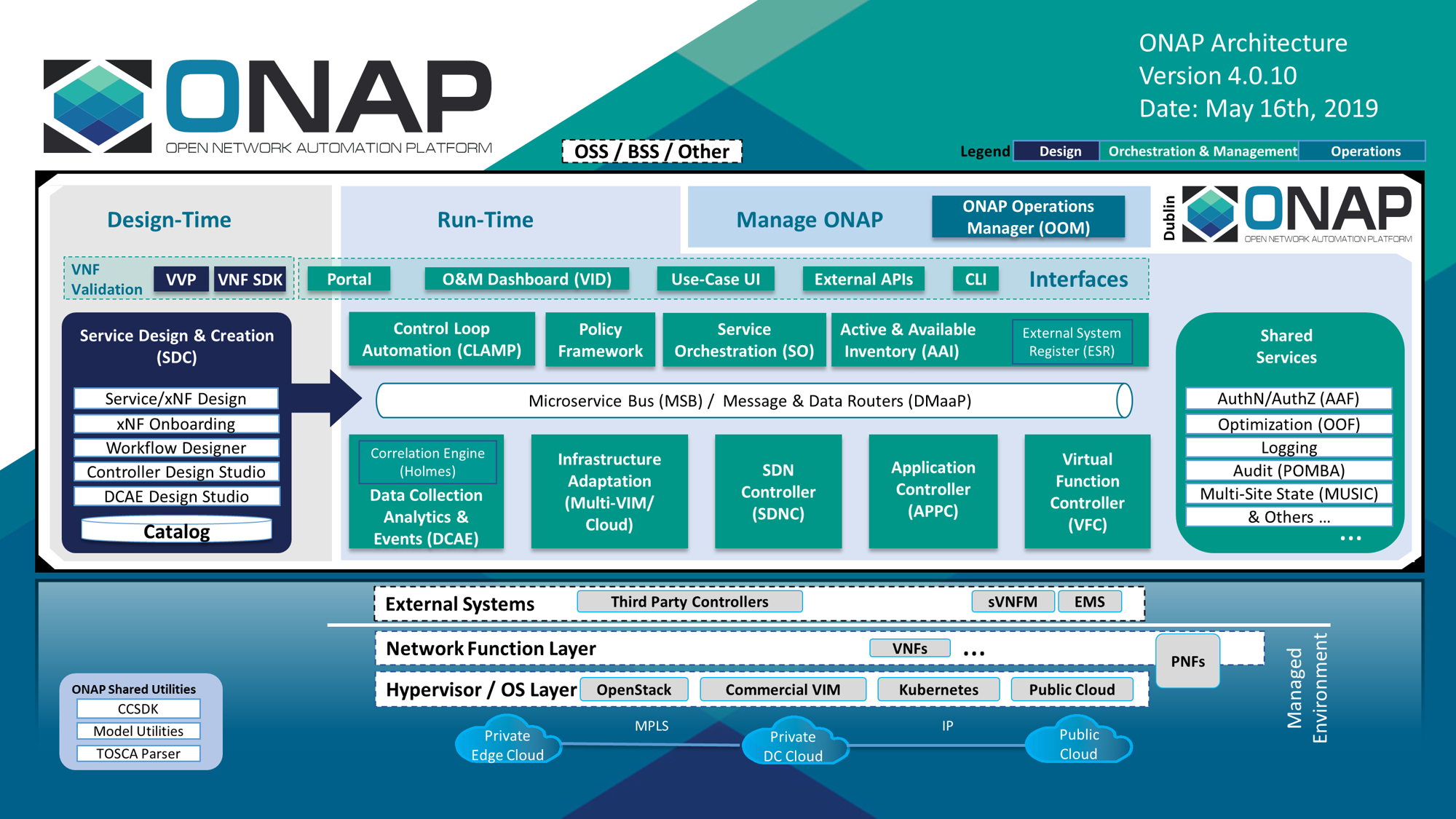

The platform provides common functions such as data collection, control loops, metadata recipe creation, and policy/recipe distribution that are necessary to construct specific behaviors.

To create a service or operational capability, ONAP supports service/operations-specific service definitions, data collection, analytics, and policies (including recipes for corrective/remedial action) using the ONAP Design Framework Portal.

Figure 1 provides a high-level view of the ONAP architecture with its microservices-based platform components.

Figure 1

ONAP Functional Architecture

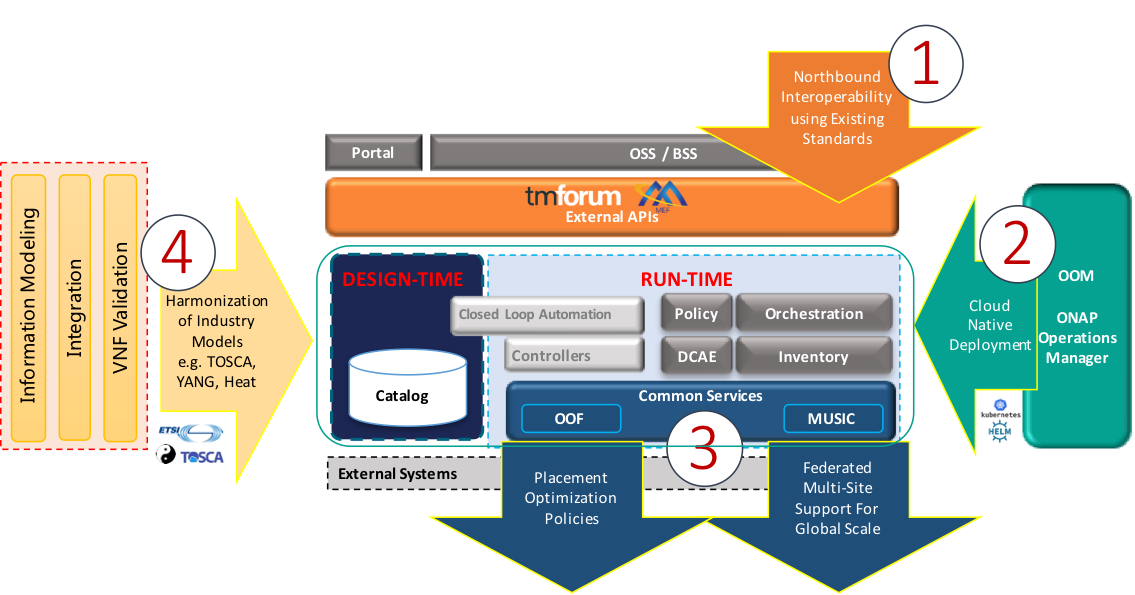

Figure 2 provides a simplified functional view of the architecture, which highlights the role of a few key components:

- Design-time environment for onboarding services and resources into ONAP and designing required services.

- External API provides northbound interoperability for the ONAP Platform, and Multi-VIM/Cloud provides cloud interoperability for the ONAP workloads.

- OOM provides the ability to manage cloud-native installation and deployments to Kubernetes-managed cloud environments.

- ONAP Shared Services provides shared capabilities for ONAP modules. MUSIC allows ONAP to scale to multi-site environments to support global-scale infrastructure requirements. The ONAP Optimization Framework (OOF) provides a declarative, policy-driven approach for creating and running optimization applications such as Homing/Placement and Change Management Scheduling Optimization. Logging provides centralized logging capabilities, and Audit (POMBA) provides capabilities to understand orchestration actions.

- ONAP shared utilities provide utilities for the support of the ONAP components.

- Information Model and framework utilities continue to evolve to harmonize the topology, workflow, and policy models from a number of SDOs including ETSI NFV MANO, TM Forum SID, ONF Core, OASIS TOSCA, IETF, and MEF.
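The declarative, policy-driven approach of the ONAP Optimization Framework (OOF) mentioned above can be pictured for Homing/Placement as follows: constraints are stated as data (predicates), and a generic routine picks the cheapest candidate that satisfies all of them. This is a hypothetical filter-then-minimize sketch, not OOF's solver or API; the site names, attributes and cost figures are invented for illustration.

```python
from typing import Callable, Dict, List, Optional

Site = Dict[str, float]  # hypothetical attribute schema: name -> value


def place(candidates: Dict[str, Site],
          constraints: List[Callable[[Site], bool]],
          objective: Callable[[Site], float]) -> Optional[str]:
    """Return the candidate that satisfies every constraint and minimizes the objective."""
    feasible = [name for name, site in candidates.items()
                if all(check(site) for check in constraints)]
    if not feasible:
        return None  # no placement satisfies the declared policy
    return min(feasible, key=lambda name: objective(candidates[name]))


if __name__ == "__main__":
    sites = {
        "edge-a": {"free_vcpus": 4, "latency_ms": 5, "cost": 3},
        "edge-b": {"free_vcpus": 16, "latency_ms": 8, "cost": 2},
        "core-1": {"free_vcpus": 64, "latency_ms": 30, "cost": 1},
    }
    # Declarative policy: at least 8 free vCPUs and at most 10 ms latency, then cheapest.
    constraints = [lambda s: s["free_vcpus"] >= 8, lambda s: s["latency_ms"] <= 10]
    print(place(sites, constraints, objective=lambda s: s["cost"]))  # edge-b
```

Note that "core-1" is the cheapest site but is excluded by the latency constraint, which is the point of separating the policy from the selection logic.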

Figure 2

Functional View of the ONAP latest release

The latest release of ONAP has a number of important new features in the areas of design time and runtime, ONAP installation, and S3P (stability, security, scalability and performance).

- Design time: ONAP has evolved the Controller Design Studio (CDS), part of the controller framework, which enables a model-driven approach to how an ONAP controller manages network resources.

- Runtime: Service Orchestration (SO) and the controllers have new functionality to support physical network functions (PNFs), reboot, traffic migration, expanded hardware platform awareness (HPA), cloud-agnostic intent capabilities, an improved homing service, SDN geographic redundancy, scale-out and edge cloud onboarding. This expands the actions available for lifecycle management, increases performance and availability, and unlocks new edge automation and 5G use cases. With support for ETSI NFV-SOL003, the introduction of an ETSI-compliant VNFM is simplified.

- VES Enhancements: To facilitate VNF vendor integration, ONAP introduced mapper components that translate specific events (SNMP traps, telemetry, 3GPP PM) into standardized ONAP VES events.

- Policy: The Policy project supports multiple policy engines and can distribute policies through the policy design capabilities in SDC, simplifying the design process. In addition, the Holmes alarm correlation engine supports GUI functionality via scripting, so that alarm correlation rules can be developed more rapidly.

- External APIs: ONAP’s northbound API continues to align more closely with TM Forum APIs (Service Catalog, Service Inventory, Service Order and Hub API) and MEF APIs (the Legato and Interlude APIs) to simplify integration with OSS/BSS. The VID and UUI operations GUI projects support a larger range of lifecycle management actions through a simple point-and-click interface, allowing operators to perform more tasks with ease.

- Closed Loop: The CLAMP project supports a dashboard to view DMaaP and other events during design and runtime, easing the debugging of control-loop automation.

- Service Mesh Support: ONAP has experimentally introduced Istio in certain components as a step toward adopting a service mesh.

- ONAP installation: The ONAP Operations Manager (OOM) continues to streamline ONAP installation using Kubernetes, Docker containers and Helm charts. OOM supports pluggable persistent storage, including GlusterFS, giving users more storage options. In a multi-node deployment, OOM allows more control over the placement of services based on available resources or node selectors. Finally, OOM now supports backup and restore of an entire Kubernetes deployment, providing data protection.

- Deployability: Dublin continued the momentum on the 7 Dimensions (Stability, Security, Scalability, Performance; and Resilience, Manageability, and Usability) established in prior releases, starting with Beijing. A new logging project initiative called Post Orchestration Model Based Audit (POMBA) can check for deviations between the design and operations environments, thus increasing network service reliability. Numerous other projects, including Logging, SO, VF-C, A&AI, Portal, Policy, CLAMP and MSB, have improvements in the areas of performance, availability, logging, the move to a cloud-native architecture, authentication, stability, security, and code quality. Finally, the versions of OpenDaylight and Kafka integrated into ONAP were upgraded to the Oxygen and v0.11 releases, providing new capabilities such as P4 and data routing, respectively.
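The kind of check POMBA performs, comparing what was designed with what is actually deployed, can be sketched as a diff between two models. This is a hypothetical simplification, not POMBA's implementation or data model: each side is reduced to a dictionary of element identifiers and attributes, and the element and attribute names are invented.

```python
from typing import Dict, List, Tuple

Model = Dict[str, Dict[str, str]]  # element id -> attribute name -> value (hypothetical)


def audit(design: Model, ops: Model) -> List[Tuple[str, str]]:
    """List deviations between the designed model and what is observed in operations."""
    findings: List[Tuple[str, str]] = []
    for elem in sorted(design.keys() | ops.keys()):
        if elem not in ops:
            findings.append((elem, "designed but not found in operations"))
        elif elem not in design:
            findings.append((elem, "found in operations but not in design"))
        else:
            # Compare the union of attributes so missing and extra values both show up.
            for attr in sorted(design[elem].keys() | ops[elem].keys()):
                if design[elem].get(attr) != ops[elem].get(attr):
                    findings.append((elem, f"attribute {attr!r} differs"))
    return findings


if __name__ == "__main__":
    design = {"vnf-1": {"flavor": "m1.large", "image": "fw-1.2"},
              "vnf-2": {"flavor": "m1.small"}}
    ops = {"vnf-1": {"flavor": "m1.large", "image": "fw-1.1"},
           "vnf-3": {"flavor": "m1.small"}}
    for finding in audit(design, ops):
        print(finding)
```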

3.3 OpenDaylight

Editor: Abhijit Kumbhare

Introduction

OpenDaylight (ODL) is a modular open platform for customizing and automating networks of any size and scale. The OpenDaylight Project arose out of the SDN movement, with a clear focus on network programmability. It was designed from the outset as a foundation for commercial solutions that address a variety of use cases in existing network environments.

OpenDaylight Architecture

Model-Driven

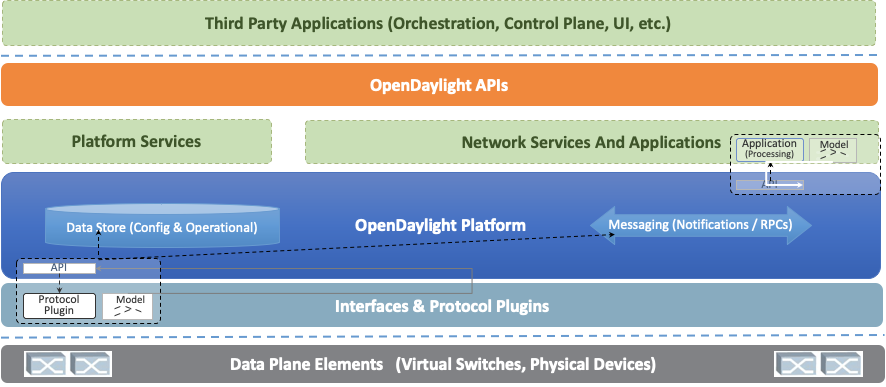

The core of the OpenDaylight platform is the Model-Driven Service Abstraction Layer (MD-SAL). In OpenDaylight, underlying network devices and network applications are all represented as objects, or models, whose interactions are processed within the SAL.

The SAL is a data exchange and adaptation mechanism between YANG models representing network devices and applications. The YANG models provide generalized descriptions of a device or application’s capabilities without requiring either to know the specific implementation details of the other. Within the SAL, models are simply defined by their respective roles in a given interaction. A “producer” model implements an API and provides the API’s data; a “consumer” model uses the API and consumes the API’s data. While ‘northbound’ and ‘southbound’ provide a network engineer’s view of the SAL, ‘consumer’ and ‘producer’ are more accurate descriptions of interactions within the SAL. For example, a protocol plugin and its associated model can be either a producer of information about the underlying network or a consumer of application instructions it receives via the SAL.

The SAL matches producers and consumers from its data stores and exchanges information between them. A consumer can find a producer that it is interested in. A producer can generate notifications; a consumer can receive notifications and issue RPCs to get data from producers. A producer can insert data into the SAL’s storage; a consumer can read data from the SAL’s storage.
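The producer and consumer roles described above can be made concrete with a toy broker. This is not the MD-SAL API: the real MD-SAL is a Java framework whose bindings are generated from YANG models by yangtools. `ToySal`, the data paths, the RPC name and the notification topic below are hypothetical and only mirror the interactions listed: storing and reading data, emitting and receiving notifications, and implementing and invoking RPCs.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List


class ToySal:
    """Toy broker mimicking the producer/consumer roles described above (not the MD-SAL API)."""

    def __init__(self) -> None:
        self._store: Dict[str, Any] = {}                  # shared data store
        self._rpcs: Dict[str, Callable[..., Any]] = {}     # RPC name -> producer implementation
        self._listeners: Dict[str, List[Callable[[Any], None]]] = defaultdict(list)

    # Producer side: insert data, implement RPCs, emit notifications.
    def put(self, path: str, data: Any) -> None:
        self._store[path] = data

    def register_rpc(self, name: str, impl: Callable[..., Any]) -> None:
        self._rpcs[name] = impl

    def notify(self, topic: str, notification: Any) -> None:
        for callback in self._listeners[topic]:
            callback(notification)

    # Consumer side: read data, receive notifications, invoke RPCs.
    def read(self, path: str) -> Any:
        return self._store.get(path)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._listeners[topic].append(callback)

    def call(self, name: str, *args: Any) -> Any:
        if name not in self._rpcs:  # the broker matches the consumer with a producer
            raise LookupError(f"no producer implements RPC {name!r}")
        return self._rpcs[name](*args)


if __name__ == "__main__":
    sal = ToySal()
    # A hypothetical southbound plugin acting as producer.
    sal.put("topology/node/sw1", {"ports": 48})
    sal.register_rpc("get-port-count", lambda node: sal.read(f"topology/node/{node}")["ports"])
    # A hypothetical application acting as consumer.
    alarms = []
    sal.subscribe("link-down", alarms.append)
    sal.notify("link-down", {"node": "sw1", "port": 7})
    print(sal.call("get-port-count", "sw1"), alarms)  # 48 [{'node': 'sw1', 'port': 7}]
```

Neither side references the other directly: the plugin and the application only share path, topic and RPC names, which is the decoupling the SAL is meant to provide.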

Modular and Multiprotocol

The ODL platform is designed to allow downstream users and solution providers maximum flexibility in building a controller to fit their needs. The modular design of the ODL platform allows anyone in the ODL ecosystem to leverage services created by others; to write and incorporate their own; and to share their work with others. ODL includes support for the broadest set of protocols in any SDN platform – OpenFlow, OVSDB, NETCONF, BGP and many more – that improve programmability of modern networks and solve a range of user needs.

Southbound protocols and control plane services, anchored by the MD-SAL, can be individually selected or written, and packaged together according to the requirements of a given use case. A controller package is built around four key components (odlparent, controller, MD-SAL and yangtools). To this, the solution developer adds a relevant group of southbound protocol plugins, most or all of the standard control plane functions, and a select number of embedded and external controller applications, managed by policy.

Each of these components is isolated as a Karaf feature, to ensure that new work doesn’t interfere with mature, tested code. OpenDaylight uses OSGi and Maven to build a package that manages these Karaf features and their interactions.

This modular framework allows developers and users to:

- Only install the protocols and services they need

- Combine multiple services and protocols to solve more complex problems as needs arise

- Incrementally and collaboratively evolve the capabilities of the open source platform

- Quickly develop custom, value-added features for highly specialized use cases, leveraging a common platform shared across the industry.
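The "install only what you need" property comes from features declaring their dependencies: requesting one feature pulls in exactly its transitive dependencies and nothing else. Karaf performs this resolution itself from its feature descriptors; the sketch below only models the idea with a depth-first topological sort over a hypothetical dependency graph, and the feature names are illustrative, not real OpenDaylight feature names.

```python
from typing import Dict, List, Set


def resolve(requested: List[str], deps: Dict[str, List[str]]) -> List[str]:
    """Return the requested features plus their transitive dependencies, dependencies first."""
    order: List[str] = []
    done: Set[str] = set()
    in_progress: Set[str] = set()

    def visit(feature: str) -> None:
        if feature in done:
            return
        if feature in in_progress:
            raise ValueError(f"dependency cycle involving {feature!r}")
        in_progress.add(feature)
        for dep in deps.get(feature, []):
            visit(dep)              # install what this feature needs before the feature itself
        in_progress.remove(feature)
        done.add(feature)
        order.append(feature)

    for feature in requested:
        visit(feature)
    return order


if __name__ == "__main__":
    # Hypothetical dependency graph; the names are illustrative only.
    deps = {
        "app-topology-viewer": ["plugin-netconf", "core-mdsal"],
        "plugin-netconf": ["core-mdsal", "core-yangtools"],
        "plugin-openflow": ["core-mdsal"],
        "core-mdsal": ["core-yangtools"],
        "core-yangtools": [],
    }
    print(resolve(["app-topology-viewer"], deps))
    # ['core-yangtools', 'core-mdsal', 'plugin-netconf', 'app-topology-viewer']
```

The unrequested "plugin-openflow" never appears in the result, which is the isolation the feature model is meant to give.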

Bottom Line on the Architecture

The modularity and flexibility of OpenDaylight allow end users to select whichever features matter to them and to create controllers that meet their individual needs.

Use Cases

As described above, OpenDaylight (ODL) provides a flexible common platform underpinning a wide variety of applications and use cases. Some of the most common use cases are described here, but the platform can be used in many others.

ONAP

The SDN Controller (SDNC) and the Application Controller (APPC) components of ONAP are based on OpenDaylight.

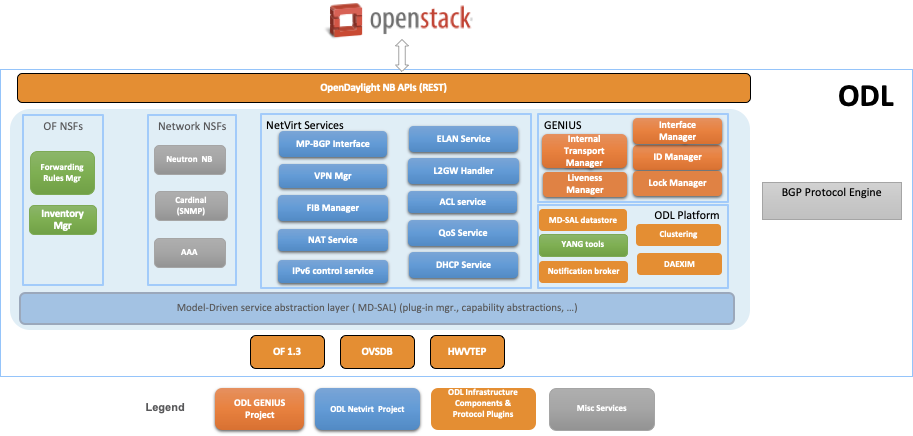

Network Virtualization for Cloud and NFV

The OpenDaylight NetVirt application can provide network virtualization (overlay connectivity) inside and between data centers for the cloud SDN use case:

- VXLAN within the data center

- L3 VPN across data centers

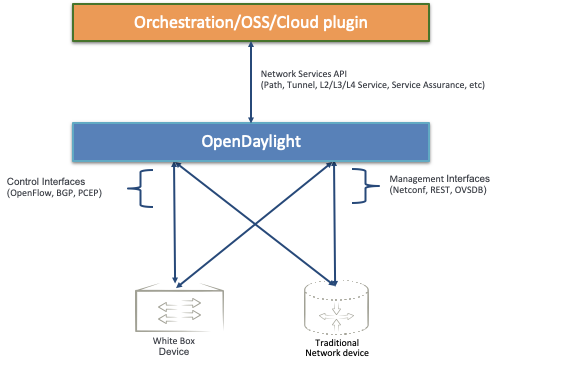

The diagram shows the components used to provide network virtualization.
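The VXLAN overlay mentioned above wraps each tenant Ethernet frame in an outer UDP packet carrying an 8-byte VXLAN header, whose 24-bit VXLAN Network Identifier (VNI) identifies the virtual network. The sketch below encodes and decodes only that header, following the layout in RFC 7348; it is not NetVirt code, since in a NetVirt deployment the encapsulation is performed by the virtual switches the controller programs. The VNI value used is an arbitrary example.

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned UDP destination port for the outer packet (RFC 7348)
FLAG_I = 0x08          # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header that precedes the encapsulated Ethernet frame."""
    if not 0 <= vni < 1 << 24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + reserved (24 bits); word 2: VNI (24 bits) + reserved (8 bits).
    return struct.pack("!II", FLAG_I << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header, rejecting headers without the I flag."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & FLAG_I:
        raise ValueError("I flag not set; VNI is not valid")
    return vni_word >> 8


if __name__ == "__main__":
    hdr = vxlan_header(5001)          # tenant network 5001 (example value)
    print(hdr.hex(), parse_vni(hdr))  # 0800000000138900 5001
```

The 24-bit VNI is what allows roughly 16 million isolated tenant networks, compared with the 4094 usable IDs of a 12-bit VLAN tag.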

Network Abstraction

OpenDaylight can expose network service APIs to northbound applications for network automation in a multi-vendor network.

3.4 Open Switch

Editor: Mike Lazar

Link: OpenSwitch

3.5 OPNFV and CNTT

Editor: Rabi Abdel

3.6 PNDA

Editor: Donald Hunter

Introduction

Innovation in the big data space is extremely rapid, but composing the multitude of technologies into an end-to-end solution can be complex and time-consuming. The vision of PNDA is to remove this complexity and allow you to focus on your solution instead. PNDA is an integrated big data platform for the networking world, curated from the best of the Hadoop ecosystem. It brings together a number of open source technologies to provide a simple, scalable, open big data analytics platform capable of storing and processing data from modern large-scale networks. It supports a range of applications for networks and services covering both the Operational Intelligence (OSS) and Business Intelligence (BSS) domains. PNDA also includes components that aid the operational management of, and application development for, the platform itself.

The current focus of the PNDA project is to deliver a fully cloud-native PNDA data platform on Kubernetes. Our focus this year has been migrating to a containerised, Helm-orchestrated set of components, which has simplified PNDA development and deployment and lowered our project maintenance costs. Our current goal with the Cloud-native PNDA project is to deliver the PNDA big data experience on Kubernetes in the first half of 2020.

PNDA provides the tools and capabilities to:

- Aggregate data like logs, metrics and network telemetry

- Scale up to consume millions of messages per second

- Efficiently distribute data with a publish-and-subscribe model

- Process bulk data in batches, or streaming data in real time

- Manage the lifecycle of applications that process and analyze data

- Explore data using interactive notebooks
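The publish-and-subscribe model in the list above lets one ingest stream feed both real-time and batch consumers without either interfering with the other. PNDA uses Apache Kafka for this; the in-memory `TopicLog` below is a hypothetical stand-in that only mimics the key idea of an append-only log with a separate read offset per subscriber group, so that, for example, a streaming job and a batch job can read the same telemetry at their own pace.

```python
from collections import defaultdict
from typing import Any, Dict, List, Tuple


class TopicLog:
    """In-memory stand-in for a pub/sub log: append-only topics, per-group read offsets."""

    def __init__(self) -> None:
        self._topics: Dict[str, List[Any]] = defaultdict(list)
        self._offsets: Dict[Tuple[str, str], int] = defaultdict(int)

    def publish(self, topic: str, message: Any) -> None:
        self._topics[topic].append(message)  # producers only ever append

    def poll(self, topic: str, group: str, max_messages: int = 100) -> List[Any]:
        """Return the next unread messages for this subscriber group and advance its offset."""
        start = self._offsets[(topic, group)]
        batch = self._topics[topic][start:start + max_messages]
        self._offsets[(topic, group)] = start + len(batch)
        return batch


if __name__ == "__main__":
    log = TopicLog()
    for sample in ({"if": "eth0", "rx": 10}, {"if": "eth0", "rx": 12}, {"if": "eth1", "rx": 7}):
        log.publish("telemetry", sample)
    print(len(log.poll("telemetry", "streaming", max_messages=2)))  # 2
    print(len(log.poll("telemetry", "batch")))                      # 3
    print(len(log.poll("telemetry", "streaming")))                  # 1
```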

PNDA Architecture

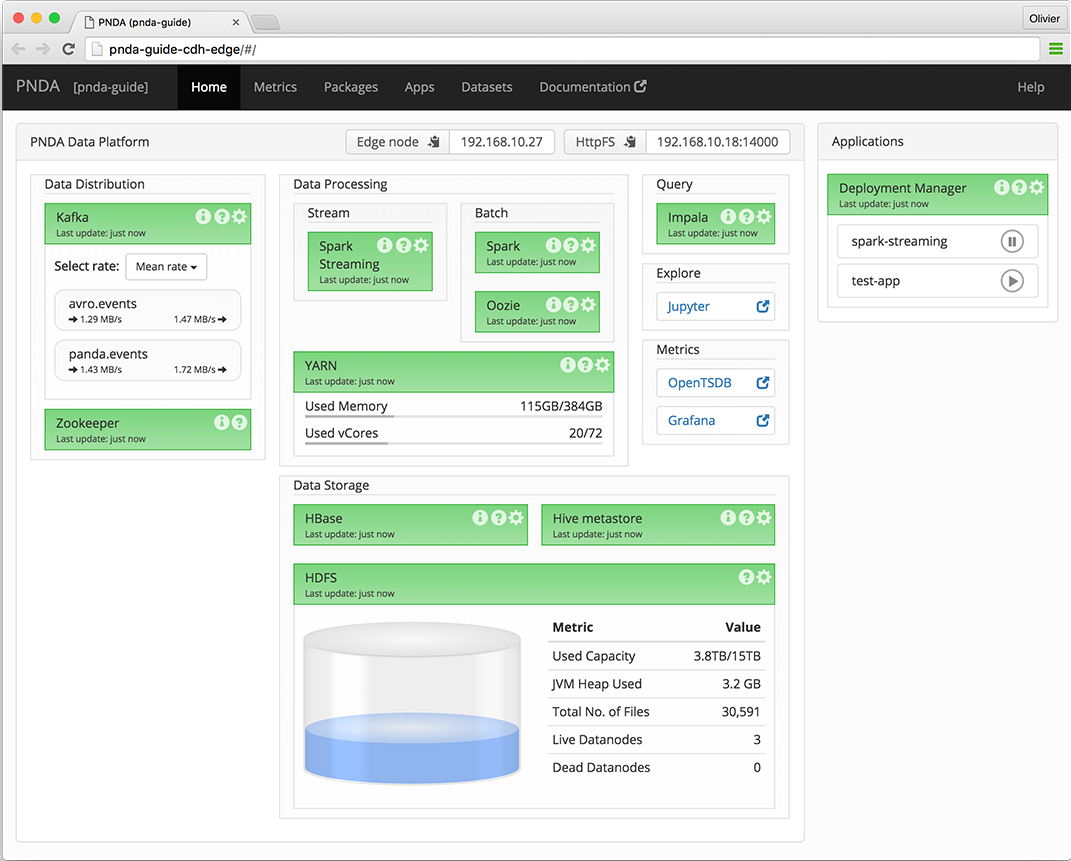

PNDA Operational View

The PNDA dashboard provides an overview of the health of the PNDA components and all applications running on the PNDA platform. The health report includes active data path testing that verifies successful ingress, storage, query and batch consumption of live data.
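The active data path test described above can be sketched as pushing a uniquely tagged probe record through each stage in order and reporting per-stage health. The stage names and the in-memory pipeline in this sketch are hypothetical stand-ins for the real ingest, storage, query and batch services. One design choice worth noting: once a stage fails, later stages are reported as skipped rather than failed, which points the operator at the first broken hop.

```python
import uuid
from typing import Callable, Dict


def data_path_test(stages: Dict[str, Callable[[str], bool]]) -> Dict[str, str]:
    """Push a uniquely tagged probe through each stage in order and report per-stage health."""
    probe_id = f"probe-{uuid.uuid4()}"  # unique marker so the probe is distinguishable from live data
    report: Dict[str, str] = {}
    upstream_ok = True
    for name, check in stages.items():  # dicts preserve insertion order
        if not upstream_ok:
            report[name] = "SKIPPED"    # cannot verify a stage whose input never arrived
            continue
        upstream_ok = check(probe_id)
        report[name] = "OK" if upstream_ok else "FAIL"
    return report


if __name__ == "__main__":
    # Hypothetical in-memory pipeline standing in for real ingest, storage and query services.
    ingested, stored = [], set()

    def ingress(probe: str) -> bool:
        ingested.append(probe)
        return True

    def storage(probe: str) -> bool:
        stored.update(ingested)
        return probe in stored

    def query(probe: str) -> bool:
        return probe in stored

    def batch(probe: str) -> bool:
        return False  # simulate a broken batch consumer

    print(data_path_test({"ingress": ingress, "storage": storage,
                          "query": query, "batch": batch}))
    # {'ingress': 'OK', 'storage': 'OK', 'query': 'OK', 'batch': 'FAIL'}
```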

3.7 SNAS

Editor: <TBD>

3.8 Tungsten Fabric

Editor: Prabhjot Singh Sethi

Introduction:

Tungsten Fabric provides a highly scalable virtual networking platform that works with a variety of virtual machine and container orchestrators, integrating them with physical networking and compute infrastructure. It is designed to support multi-tenant networks in the largest environments while supporting multiple orchestrators simultaneously.

Tungsten Fabric uses the same controller and forwarding components in every deployment, providing a consistent interface for managing connectivity in all the environments it supports. It can provide seamless connectivity between workloads managed by different orchestrators, whether virtual machines or containers, and to destinations in external networks.

Architecture Overview:

The Tungsten Fabric controller integrates with cloud management systems such as OpenStack or Kubernetes. Its function is to ensure that when a virtual machine (VM) or container is created, it is provided with network connectivity according to the network and security policies specified in the controller or orchestrator.

Tungsten Fabric consists of two primary pieces of software:

- Tungsten Fabric Controller: a set of software services that maintains a model of networks and network policies, typically running on several servers for high availability

- Tungsten Fabric vRouter: installed in each host that runs workloads (virtual machines or containers), the vRouter performs packet forwarding and enforces network and security policies.

Technologies used:

Tungsten Fabric uses networking industry standards such as a BGP EVPN control plane and VXLAN, MPLSoGRE and MPLSoUDP overlays to seamlessly connect workloads in different orchestrator domains, for example virtual machines managed by VMware vCenter and containers managed by Kubernetes.

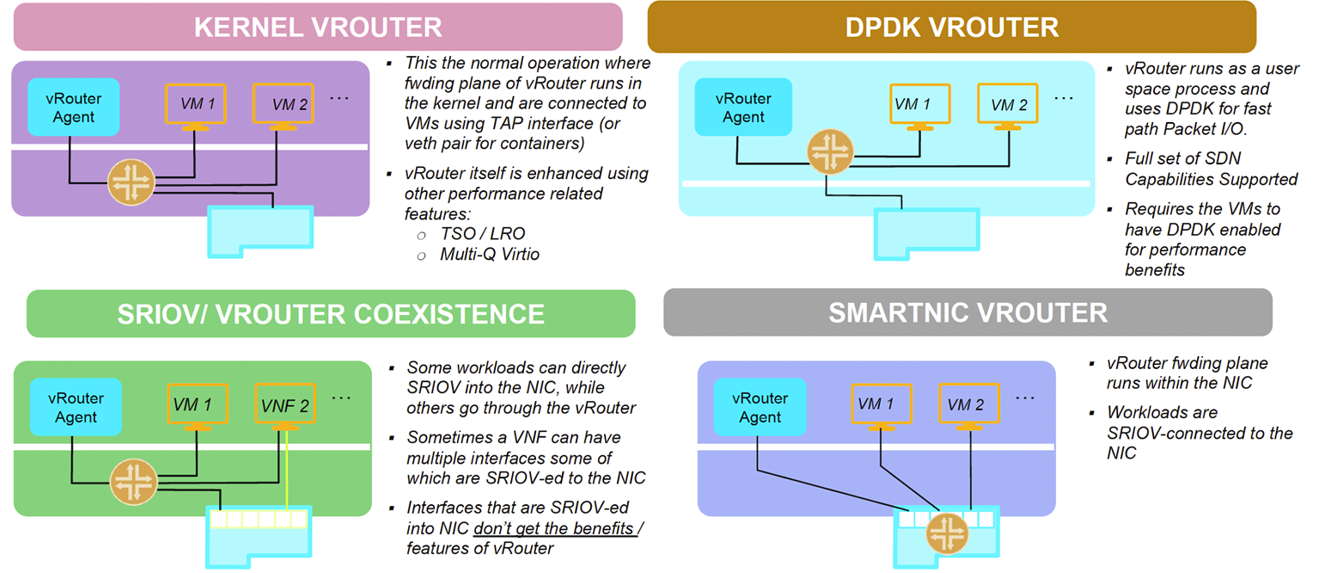

Tungsten Fabric supports four modes of datapath operation:

Tungsten Fabric connects virtual networks to physical networks:

- Using gateway routers with BGP peering

- Using ToR switches with OVSDB

- Using ToR switches managed with NETCONF and BGP EVPN peering

- Directly through datacenter underlay network (Provider networks)

Key Features:

Tungsten Fabric manages and implements virtual networking in cloud environments orchestrated by OpenStack and Kubernetes, using overlay networks between the vRouters that run on each host. It is built on proven, standards-based networking technologies that today support the wide-area networks of the world’s major service providers, repurposed to work with virtualized workloads and cloud automation in data centers ranging from large-scale enterprise data centers to much smaller telco POPs. It provides many enhanced features over the native networking implementations of orchestrators, including:

- Highly scalable, multi-tenant networking

- Multi-tenant IP address management

- DHCP and ARP proxies to avoid flooding into networks

- Efficient edge replication for broadcast and multicast traffic

- Local, per-tenant DNS resolution

- Distributed firewall with access control lists

- Application-based security policies based on tags

- Distributed load balancing across hosts

- Network address translation (1:1 floating IPs and distributed SNAT)

- Service chaining with virtual network functions

- Dual stack IPv4 and IPv6

- BGP peering with gateway routers

- BGP as a Service (BGPaaS) for distribution of routes between privately managed customer networks and service provider networks

- Integration with VMware orchestration stack
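Tag-based security policies, listed above, let rules name workload roles instead of IP addresses, so a rule keeps applying as workloads are created, moved or scaled. The sketch below illustrates only the matching idea; Tungsten Fabric's actual security model (with constructs such as application policy sets and firewall policies, enforced in the vRouter) is richer, and the `Rule` type, the tag values, first-match ordering and default deny are all assumptions made here.

```python
from dataclasses import dataclass
from typing import Dict, List

Tags = Dict[str, str]  # tag type -> value, e.g. {"application": "shop", "tier": "web"}


@dataclass
class Rule:
    src: Tags     # tags the source workload must carry
    dst: Tags     # tags the destination workload must carry
    port: int
    action: str   # "pass" or "deny"


def matches(selector: Tags, tags: Tags) -> bool:
    """A workload matches if it carries every tag in the selector."""
    return all(tags.get(key) == value for key, value in selector.items())


def evaluate(rules: List[Rule], src: Tags, dst: Tags, port: int) -> str:
    for rule in rules:  # first matching rule wins (an assumption of this sketch)
        if rule.port == port and matches(rule.src, src) and matches(rule.dst, dst):
            return rule.action
    return "deny"  # default deny (also an assumption)


if __name__ == "__main__":
    rules = [Rule({"tier": "web"}, {"tier": "app"}, 8080, "pass"),
             Rule({"tier": "app"}, {"tier": "db"}, 5432, "pass")]
    web = {"application": "shop", "tier": "web"}
    app = {"application": "shop", "tier": "app"}
    db = {"application": "shop", "tier": "db"}
    print(evaluate(rules, web, app, 8080))  # pass
    print(evaluate(rules, web, db, 5432))   # deny: web may not reach the database directly
```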