All members are encouraged to contribute directly to this working draft. See also the OVP 2.0 Technical Ideas page.

Charter

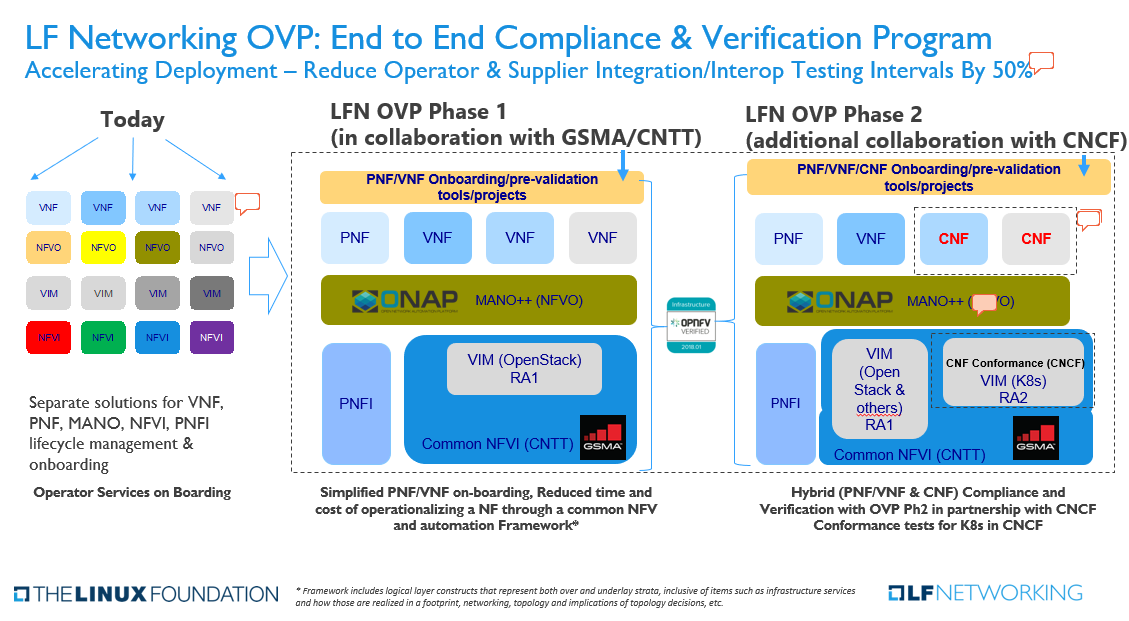

The LFN OPNFV Verification Program Phase 2 (OVP 2.0) is an open source, community-led compliance and verification program to promote, enable, and evolve a thriving ecosystem of cloud-native telecoms in which Cloud Native Network Functions (CNFs) from different vendors can interoperate and run on the same immutable infrastructure. It includes CNF compliance and verification testing based on requirements and best practices put forth by both the CNCF and CNTT. These requirements feed toolsets and testing scripts developed within the OPNFV, ONAP, and CNCF communities.

Vision

An end-to-end set of compliance/conformance tests and a testing toolchain for independently verified Cloud Native Functions for use in telco environments.

Who is participating?

Linux Foundation Networking

- Open Platform for NFV (OPNFV)

- Open Network Automation Platform (ONAP)

- Compliance and Verification Committee (CVC)

Common NFVI Telco Taskforce (CNTT)

Cloud Native Computing Foundation (CNCF)

- Telecom User Group (TUG)

- CNF Conformance

5G Cloud Native Network Demo (Brandon Wick)

How to engage

- Join the mailing list: https://lists.opnfv.org/g/OVP-p2

- Subscribe to the calendar for the meetings: https://lists.opnfv.org/g/OVP-p2/ics/7337234/1823902251/feed.ics

- Engage in the discussions at the weekly meetings

- Share your ideas on these wiki pages and in the comment section at the bottom of each page

What is the Minimum Viable Program?

The end-state vision is spelled out above. Because achieving it is likely a multi-year endeavor, what can we do this year to chart the direction and set in motion the program that achieves the vision?

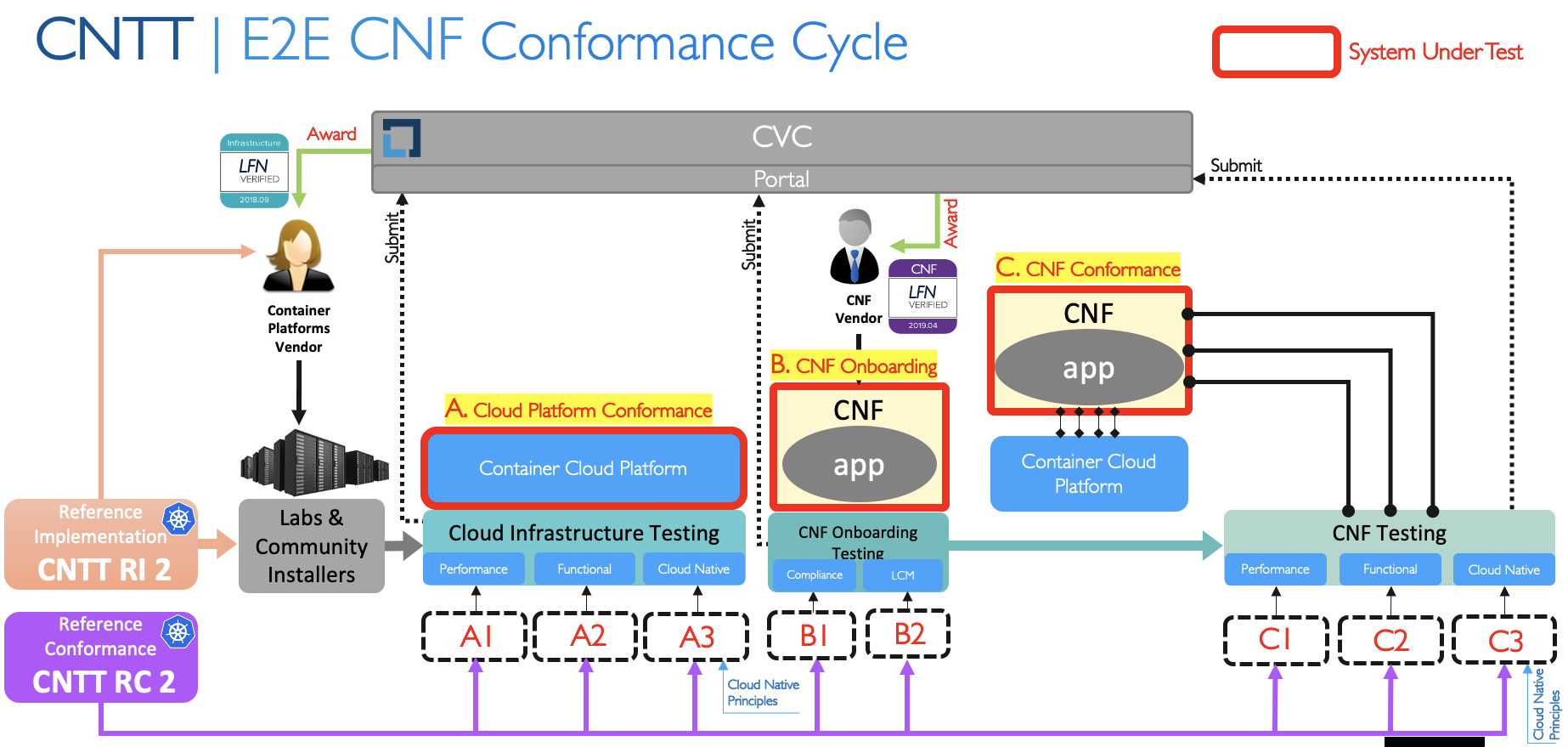

First, the envisioned process is diagrammed below:

E2E CNF Conformance Cycle

In the diagram, each “?” marks a process that needs definition and refinement for the different execution environment of OVP Phase 2.

Categories of Conformance:

- A. Cloud Platform Conformance

  - A1. Functional (i.e. CNTT RA-2 Compliance)

- B. CNF Conformance

  - B1. Cloud Native (i.e. CNF Conformance)

  - B2. Functional (i.e. CNTT RA-2 Compatibility)

  - B3. ONAP

  - B4. Performance

- C. Cloud Platform Benchmarking

MVP proposal

Not everything can be tackled in the MVP. Here are two elements that can be:

- A1: Cloud Platform Conformance (may be partial)

- B1 & B2: CNF Compliance

What needs to happen or be decided:

- Decide on tooling:

  - Cloud installation tools for a given lab.

  - Basic testing tools.

  - Which open source project is responsible for this?

- Decide on lab:

  - Community development resource access.

  - Platform details: specific HW details, login info, etc.

  - Which open source project is responsible for this?

- Decide on badging process:

  - Test results review process.

  - Portal and badging process.

- Decide on open source testing projects:

  - Which project will deliver which test category (A, B, and C)?

    - A: ?

    - B: ?

    - C: ?


Workstreams Proposal (see OVP 2.0 Workstreams)

- WS01: Governance/Structure/Mktg, Badging Process and Framework [Primary Owner: CVC] Rabi Abdel, Lincoln Lavoie, Heather Kirksey

- WS02: Definition Requirements Activities (CNTT/ONAP/SDOs) [Primary Owner: CNTT] Bill Mulligan, Trevor Lovett, Ryan Hallahan, Olivier Smith, Fernando Oliveira

- WS03: Lab and tooling [Primary Owner: ONAP+OPNFV+CNCF] Frederick Kautz, Kanagaraj Manickam, Trevor Cooper, Ryan Hallahan

- WS04: A. Cloud Platform Conformance [Primary Owner: XX] Taylor Carpenter (cloud native)

- WS05: B3. CNF Compliance - ONAP: Kanagaraj Manickam, Catherine Lefevre, Amar Kapadia, Seshu Kumar Mudiganti, Ranny Haiby, Srinivasa Addepalli, Fernando Oliveira, Byung-Woo Jun

- WS06: B1/B2. CNF Compliance - Cloud Native & Functional [Primary Owner: CNCF, Taylor Carpenter] Olivier Smith, Trevor Lovett

- WS07: ONAP POC and existing development work [Primary Owner: ONAP] Merged with WS05

- WS08: B4/C - Performance: Trevor Cooper

MVP Roadmap - NEEDS COMMUNITY REVIEW/UPDATE

- Define MVP - by April 1

- Validate approach with partners (CNTT, CNCF, LFN projects) - during weekly OVP p2 meetings <ADD LINK>

- Implement a “HelloWorld” sample CNF test - Hackfest at the June Developer and Testing Forum

- Implement MVP - by Q1/Q2 2021

Immediate tasks and action items (AIs):

- Identify cloud infrastructure installation tools.

- Identify Labs for testing and human resources who will manage the labs (in addition to the testers)

- Identify projects to develop test scripts and tools to perform cloud infrastructure functional testing and agree on scope.

- Agree on scope of CNF Onboarding testing.

- Identify projects to develop test scripts and tools to perform CNF testing and agree on scope.

- Agree on the CVC portal.

OLD: Workstreams Proposal

Development/Lab environment [Primary Owner: OPNFV+CNTT] - What lab resources are available for hosting configurations for developing the NFVI and running ongoing CI/CD verification tests? How can a “Lab as a Service” (LaaS) be instantiated for CNF/NFVI testing, development, and validation efforts? Does the CNCF TestBed meet the needs?

Test Tooling/Test Suite Development Based on Above Categories [Primary Owner: OPNFV]: Understand dependencies and what can be done in parallel. Also, what is the overall program test framework (e.g., Dovetail or something similar) into which tests from projects and communities can be plugged?

- On-boarding/Lifecycle Management testing - What are the preferred tools/methodologies for repeatably creating an NFVI environment supporting containers? Can CNFs be deployed and respond to basic lifecycle events?

- Platform test - What is the source for the tests and toolchains for developing and verifying the NFVI platform for container-based CNFs? Are there existing CNCF infrastructure tests/standards that should be co-opted? What level of microservices should be integrated into the standard platform? How do we validate each of the microservices against a specific version of the platform?

- CNF test - What is the source for the tests and toolchains for developing and verifying the CNFs as the SUT (system under test) atop a verified NFVI? How does the CNCF test bed integrate into the OVPp2 workflow and test process? With many microservices acting as “helper functions”, how do we validate each of the microservices against a specific version of the CNF?

- Performance testing - Characterizing both infrastructure and CNF performance would be useful, but this is very difficult to define and execute cooperatively (DEFER)

CVC portal [Primary Owner: CVC] - Define the UI for consumers of the CVC and an on-ramp for producers of NFVIs and CNFs to publish their successful validation results. How do the CNF compliance tests (CNCF) figure into the telco-focused OVPp2 process?

Governance/Structure/Mktg Framework [Primary Owner: CVC] - Includes third-party labs, white papers, and slide decks, with input from the MAC.

8 Comments

Catherine Lefevre

Dear all,

The ONAP TSC CNCF Task Force (onap-cnf-taskforce@lists.onap.org) would like to suggest some updates to the "OVP2.0 E2E Compliance and Verification Program" slide.

We have also added some comments and questions.

VNF-CNF OVP - 2 key slides_V5

Olivier Smith

Catherine, the slides you attached seem to be the same as those already posted on the page. Also, what are the questions and comments that you have added? If we move them into the page, we can discuss them at our next meeting. It will just be easier for everyone to see.

Catherine Lefevre

Olivier Smith, the second slide is different:

#1 CNF Conformance has been removed from the CNF boxes in alignment with the CNF Conformance definition - https://github.com/cncf/cnf-conformance

#2 VIM (OpenStack) and VIM (K8S) are part of CNTT NFVI boxes in alignment with https://github.com/cntt-n/CNTT/tree/master/doc/ref_arch

#3 NFVi, PFNi → NFVI, PFNI – spelling aligned with ETSI

#4 Remove "for CNFs and" from "Hybrid (PNF/VNF & CNF) Compliance and Verification with OVP Ph2 in partnership with CNCF Conformance tests for CNFs and for K8s in CNCF"

Additional changes to be made:

#5 Picture under "Today" should include PNF, not only VNF

Questions:

#6 Reduce Testing Intervals by 50% - How was "50%" determined?

#7 MANO++ - Is this meant to confirm that ONAP is MANO++, meaning that ONAP provides capabilities beyond MANO functions, e.g., control loops?

Olivier Smith

Catherine Lefevre - OK, thanks. I've moved the updated slide, comments, and questions into the main page. I just think that it will facilitate a better discussion at our next meeting.

Trevor Lovett

Based on our experience rolling out the initial VNF testing in ONAP and initial VNF badges, we think there are some good lessons learned that should be carried forward into this effort. We’d like to share some standards and principles that could be considered for adoption in the MVP phase that will better position us as the various platforms, standards, and requirements become more mature over time.

We feel it's critical that all tests tie back to a clear "source of truth" that they are validating against. This means we need alignment on which projects and documents/artifacts define the requirements, and those requirements must adhere to certain standards. Likewise, the tests themselves need a clear linkage to the requirements. At this stage, this is understandably a bit messy, as there are parallel groups and communities working on overlapping initiatives, which is a good problem to have.

With those thoughts in mind, we think a central tenet of the MVP should be to establish criteria and principles to be followed during the MVP phase. Obviously, these are a starting position and open for discussion.

We feel it is critical to establish these principles early in the program or it will be difficult to synchronize the requirements and tests as we progress. It will also make it difficult to understand where and how to contribute without such principles.

In the specific MVP categories, we suggest the following refinements to the initial scope to ensure we have a better chance of delivering on the stated objectives:

Taylor Carpenter

Trevor Lovett, Olivier Smith, Jim Baker can the "Categories of Conformance" section be updated to match the diagrams (or vice versa)?

Trevor Lovett

I do not have the source material for it. If someone has it, I can update it. If not, I can just remove it.

Jim Baker

Rabi produced the source materials. I've requested them and will attach them to the page for your editing pleasure when I get them.