This page is no longer in use. Please refer to, and add content to, PR #2118 instead.

- CNTT Hybrid Multi-Cloud Architecture (includes Edge)

- CNTT Edge Architecture

Topic Areas:

(RM Chapter 3 new section on Edge Computing w/o OpenStack specifics)

Edge deployment scenarios

Cloud Infrastructure (CI) deployment environment for different edge deployments:

Controlled: Indoors, Protected, and Restricted environments. Data Centers, Central Offices, Indoor venues. Operational benefits for installation and maintenance, and reduced need for hardened or ruggedized equipment.

Exposed: Outdoors, Exposed, Harsh and Unprotected environments. Requires expensive ruggedized equipment.

Cloud Infrastructure (CI) hardware type for different edge deployments:

Commodity/Standard: COTS, standard hardware designs and form factors. Deployed only in Controlled environments. Reduced operational complexity.

Custom/Specialised: non-standard hardware designs including specialised components, ruggedised for harsh environments, and different form factors. Deployed in Controlled and/or Exposed environments. Operationally complex environment.
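The environment and hardware-type rules above can be expressed as a small validation check. This is a minimal sketch for illustration only; the names `Environment`, `HardwareType`, `ALLOWED_ENVIRONMENTS` and `is_valid_placement` are hypothetical and are not part of any CNTT specification.

```python
from enum import Enum


class Environment(Enum):
    CONTROLLED = "controlled"  # indoors, protected, restricted
    EXPOSED = "exposed"        # outdoors, harsh, unprotected


class HardwareType(Enum):
    COMMODITY = "commodity"    # COTS, standard designs and form factors
    CUSTOM = "custom"          # specialised and/or ruggedised designs


# Environments each hardware type may be deployed in, per the lists above.
ALLOWED_ENVIRONMENTS = {
    HardwareType.COMMODITY: {Environment.CONTROLLED},
    HardwareType.CUSTOM: {Environment.CONTROLLED, Environment.EXPOSED},
}


def is_valid_placement(hardware: HardwareType, environment: Environment) -> bool:
    """Return True if the hardware type may be deployed in the environment."""
    return environment in ALLOWED_ENVIRONMENTS[hardware]
```

A planning tool could call `is_valid_placement(HardwareType.COMMODITY, Environment.EXPOSED)` to reject commodity hardware proposed for an outdoor site.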

Cloud Infrastructure (CI) hardware specifications for different edge deployments:

CNTT Basic: General Purpose CPU; Standard Design.

CNTT Network Intensive: CNTT Basic + high speed user plane (low latency, high throughput); Standard Design.

CNTT Network Intensive+ : CNTT Network Intensive + optional hardware acceleration (compared with software acceleration can result in lower power use and smaller physical size); possible Custom Design.

CNTT Network Intensive++ : CNTT Network Intensive + required hardware acceleration; Custom Design.
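The four hardware specifications above build on one another, which a small data model can make explicit. The sketch below is illustrative only: `Acceleration`, `HardwareProfile`, `PROFILES` and `profiles_with_acceleration` are hypothetical names, and `Acceleration.NONE` simply means the list above does not mention hardware acceleration for that profile.

```python
from dataclasses import dataclass
from enum import Enum


class Acceleration(Enum):
    NONE = "none"          # assumption: not mentioned for this profile
    OPTIONAL = "optional"
    REQUIRED = "required"


@dataclass(frozen=True)
class HardwareProfile:
    name: str
    high_speed_user_plane: bool
    hardware_acceleration: Acceleration
    design: str  # "standard", "possibly custom", or "custom"


# Profiles transcribed from the list above, in the same order.
PROFILES = {
    p.name: p
    for p in (
        HardwareProfile("CNTT Basic", False, Acceleration.NONE, "standard"),
        HardwareProfile("CNTT Network Intensive", True, Acceleration.NONE, "standard"),
        HardwareProfile("CNTT Network Intensive+", True, Acceleration.OPTIONAL, "possibly custom"),
        HardwareProfile("CNTT Network Intensive++", True, Acceleration.REQUIRED, "custom"),
    )
}


def profiles_with_acceleration() -> list[str]:
    """Names of profiles where hardware acceleration is optional or required."""
    return [
        name
        for name, profile in PROFILES.items()
        if profile.hardware_acceleration is not Acceleration.NONE
    ]
```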

Server capabilities for different edge deployments and the OpenStack services that run on these servers; the OpenStack services are containerised to save resources and to provide intrinsic availability and autoscaling:

Control nodes host the OpenStack control plane components (a subset of Cloud Controller Services), and need certain capabilities:

OpenStack services: Identity (keystone), Image (glance), Placement, Compute (nova), Networking (neutron) with ML2 plug-in

Message Queue, Database server

Network Interfaces: management, provider and overlay

Compute nodes host a subset of the Compute Node Services:

Hypervisor

OpenStack Compute nova-compute (creating/deleting instances)

OpenStack Networking neutron-l2-agent, VXLAN, metadata agent, and any dependencies

Network Interfaces: management, provider and overlay

Local Ephemeral Storage

Storage Nodes host the cinder-volume service. Storage nodes are optional and required only for some specific Edge deployments that need large persistent storage:

Block storage cinder-volume

Storage devices specific cinder volume drivers

Cloud partitioning: Host Aggregates, Availability Zones

OpenStack Edge Reference Architecture provides more depth and details
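The role-to-service lists above can be kept as a simple lookup, which helps when working out what a server hosting several roles must run. This is a sketch under stated assumptions: the dictionary keys and the helper `services_for_server` are hypothetical, and the service names are transcribed from the lists above rather than taken from any deployment tool.

```python
# Services per node role, transcribed from the lists above.
NODE_ROLE_SERVICES = {
    "control": [
        "keystone", "glance", "placement", "nova", "neutron (ML2 plug-in)",
        "message queue", "database server",
    ],
    "compute": [
        "hypervisor", "nova-compute", "neutron-l2-agent", "metadata agent",
    ],
    "storage": ["cinder-volume"],  # optional role, only for some deployments
}


def services_for_server(roles: list[str]) -> list[str]:
    """Return the de-duplicated services a server hosts for the given roles."""
    services: list[str] = []
    for role in roles:
        for service in NODE_ROLE_SERVICES[role]:
            if service not in services:
                services.append(service)
    return services
```

For example, `services_for_server(["control", "compute"])` lists what a combined single-server deployment would host, without the optional storage service.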

Edge Deployments:

Small footprint edge device: only networking agents

Single server: deploy multiple (one or more) Compute nodes

Single server: single Controller and multiple (one or more) Compute nodes

HA at edge (at least 2 edge servers): Multiple Controller and multiple Compute nodes
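The deployment scenarios above can be told apart by counting servers and node roles. The following is a rough sketch; the function name `classify_edge_deployment` and the returned labels are hypothetical, and reading "multiple" as "two or more" for the HA case is an assumption.

```python
def classify_edge_deployment(servers: int, controllers: int, computes: int) -> str:
    """Map node counts to one of the edge deployment scenarios listed above."""
    if min(servers, controllers, computes) < 0:
        raise ValueError("counts must be non-negative")
    if controllers == 0 and computes == 0:
        # Only networking agents run on the device.
        return "small footprint edge device"
    if servers == 1 and controllers == 0 and computes >= 1:
        return "single server, compute only"
    if servers == 1 and controllers == 1 and computes >= 1:
        return "single server, single controller plus compute"
    # Assumption: "multiple" controllers and computes means at least two of each.
    if servers >= 2 and controllers >= 2 and computes >= 2:
        return "HA at edge"
    return "not one of the listed scenarios"
```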

SDN Networking support on Edge

(RM Potential Ch 2 as a specialised workload type)

Network Function as a Service (NFaaS)

Higher level services such as Network Functions (includes composition of Network Functions to form higher level services) offered on Telco and other clouds (HCP, specialised, etc.). While here the discussion is about NFaaS, this is equally applicable to anything as a service (XaaS)

- NFaaS offered on one or more Cloud Services (Telco, HCP, others) including at the Edge

- Network integration and Service Chaining

- Security Considerations including delegated User Authentication & Authorization

- Commercial arrangements including User Management

(RM Ch03 as a sub-section of Introduction)

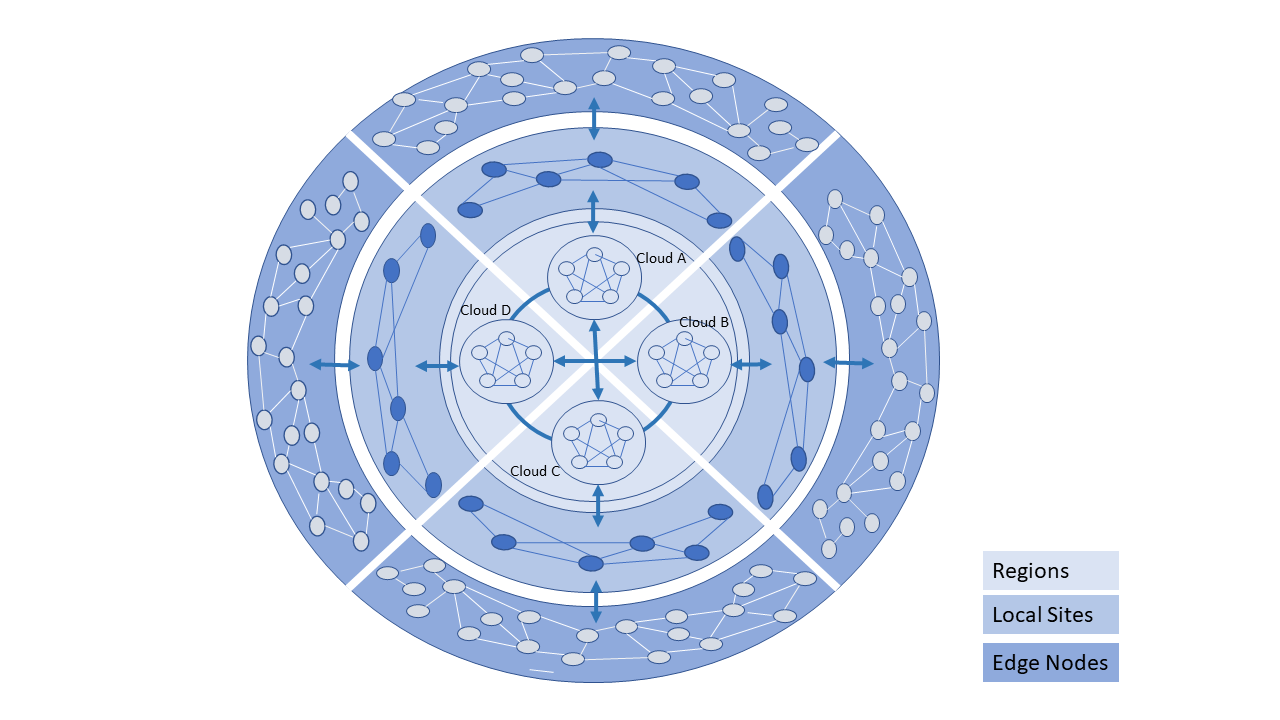

Hybrid Multi-Cloud Enabled Edge Architecture

(PG: In the above diagram, replace "Local" with "Metro")

- The Telco Operator may own and/or have partnerships and network connections to utilize multiple Clouds

- for network services, IT workloads, external subscribers

- On Prem Private

- Open source; Operator or Vendor deployed and managed | OpenStack or Kubernetes based

- Vendor developed; Operator or Vendor deployed and managed | Examples: Azure on Prem, VMWare, Packet, Nokia, Ericsson, etc.

- On Prem Public: Commercial Cloud service hosted at Operator location but for both Operator and Public use | Example: AWS Wavelength

- Outsourced Private: hosting outsourced; hosting can be at a Commercial Cloud Service | Examples: Equinix, AWS, etc.

- (Outsourced) Public: Commercial Cloud Service | Examples: AWS, Azure, VMWare, etc.

- Multiple different Clouds can be co-located in the same physical location and may share some of the physical infrastructure (for example, racks)

| Type | System Developer | System Maintenance | System Operated & Managed by | Location where Deployed | Primary Resource Consumption Models |
|---|---|---|---|---|---|
| Private (Internal Users) | Open Source | Self/Vendor | Self/Vendor | On Prem | Reserved, Dedicated |
| Private | Vendor / HCP | Self/Vendor | Self/Vendor | On Prem | Reserved, Dedicated |
| Public | Vendor / HCP | Self/Vendor | Self/Vendor | On Prem | Reserved, On Demand |
| Private | HCP | Vendor | Vendor | Vendor Locations | Reserved, Dedicated |
| Public (All Users) | HCP | Vendor | Vendor | Vendor Locations | On Demand, Reserved |

- Each Telco Cloud consists of multiple interconnected Regions

- A Telco Cloud Region may connect to multiple regions of another Telco Cloud (large capacity networks)

- A Telco Cloud also consists of interconnected local sites (multiple possible scenarios)

- A Telco Cloud's local site may connect to multiple Regions within that Telco Cloud or another Telco Cloud

- A Telco Cloud also consists of a large number of interconnected edge nodes

- Edge nodes may be impermanent

- A Telco Cloud's Edge node may connect to multiple local sites within that Telco Cloud or another Telco Cloud; an Edge node may, in rare cases, connect to a Telco Cloud Region

(RM Ch03 the new Edge Section)

Comparison of Edge terms from various Open Source Efforts

Characteristics (Compute through Upgrades) and Other Terms (OpenStack through GSMA):

| CNTT Term? | Compute | Storage | Networking | RTT* | Security | Scalability | Elasticity | Resiliency | Preferred Workload Architecture | Upgrades | OpenStack | OPNFV Edge | Edge Glossary | GSMA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Regional Data Center (DC); Fixed | 1000's; Standardised; >1 CPU; >20 cores/CPU | 10's EB; Standardised; HDD and NVMe; Permanence | >100 Gbps; Standardised | ~100 ms | Highly Secure | Horizontal and unlimited scaling | Rapid spin up and down | Infrastructure architected for resiliency; Redundancy for FT and HA | Microservices based; Stateless; Hosted on Containers | HW Refresh: ?; Firmware: When required; Platform SW: CD | Central Data Center | | | |
| Metro Data Centers; Fixed | 10's to 100's; Standardised; >1 CPU; >20 cores/CPU | 100's PB; Standardised; NVMe on PCIe; Permanence | >100 Gbps; Standardised | ~10 ms | Highly Secure | Horizontal but limited scaling | Rapid spin up and down | Infrastructure architected for some level of resiliency; Redundancy for limited FT and HA | Microservices based; Stateless; Hosted on Containers | HW Refresh: ?; Firmware: When required; Platform SW: CD | Edge Site | Large Edge | Aggregation Edge | |
| Edge; Fixed / Mobile | 10's; Some Variability; >=1 CPU; >10 cores/CPU | 100 TB; Standardised; NVMe on PCIe; Permanence / Ephemeral | 50 Gbps; Standardised | ~5 ms | Low Level of Trust | Horizontal but highly constrained scaling, if any | Rapid spin up (when possible) and down | Applications designed for resiliency against infra failures; No or highly limited redundancy | Microservices based; Stateless; Hosted on Containers | HW Refresh: ?; Firmware: When required; Platform SW: CD | Far Edge Site | Medium Edge | Access Edge / Aggregation Edge | |
| Mini-/Micro-Edge; Mobile / Fixed | 1's; High Variability; Harsh Environments; 1 CPU; >2 cores/CPU | 10's GB; NVMe; Ephemeral; Caching | 10 Gbps; Connectivity not Guaranteed | <2 ms; Located in network proximity of EUD/IoT | Untrusted | Limited Vertical Scaling (resizing) | Constrained | Applications designed for resiliency against infra failures; No or highly limited redundancy | Microservices based or monolithic; Stateless or Stateful; Hosted on Containers or VMs; Subject to QoS, adaptive to resource availability, viz. reduce resource consumption as they saturate | HW Refresh: ?; Firmware: ?; Platform SW: ? | Fog Computing (Mostly deprecated terminology); Extreme Edge; Far Edge | Small Edge | Access Edge | |

*RTT: Round Trip Times

EUD: End User Devices

IoT: Internet of Things
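The RTT column of the comparison table above suggests a simple way to shortlist tiers for a latency-sensitive workload. The sketch below is illustrative only: `TIER_RTT_MS` and `tiers_within_rtt` are hypothetical names, the values are the approximate figures from the table, and the "<2 ms" entry is treated as an upper bound of 2 ms.

```python
# Approximate RTT per tier in milliseconds, taken from the table above.
# The Mini-/Micro-Edge entry is listed as "<2 ms"; 2.0 is used as an upper bound.
TIER_RTT_MS = {
    "Regional Data Center": 100.0,
    "Metro Data Centers": 10.0,
    "Edge": 5.0,
    "Mini-/Micro-Edge": 2.0,
}


def tiers_within_rtt(budget_ms: float) -> list[str]:
    """Return tiers whose approximate RTT fits the budget, most central first."""
    return [tier for tier, rtt in TIER_RTT_MS.items() if rtt <= budget_ms]
```

A workload with a 10 ms round-trip budget would therefore be a candidate for the Metro, Edge, or Mini-/Micro-Edge tiers; the other table columns (security, scalability, resiliency) would still need to be weighed before choosing one.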

11 Comments

Beth Cohen

Great start!

Beth Cohen

Notes from Sept 8 discussion. We need to capture the outline here, then move it into the Github docs repository.

CI Deployment environment for edge. Difference between controlled environments (data centers with conditioning) and uncontrolled environments, where equipment might be outside or in otherwise extreme conditions. Aspects to consider could be lack of physical security, extreme temperatures, electro-magnetic interference (hospitals, etc.). The term for exposed is harsh environments. Need to change the doc above.

Cloud Infrastructure (CI) hardware design – Hardware is more important at the Edge than in the Core. More dependent on HW. Intel FlexRAN for Edge. Other companies have "edge" equipment – Dell, Lenovo, SuperMicro. What makes the Edge equipment special? Is that needed to deploy an Edge infrastructure? Much of it is ruggedized for harsh environments.

Server section - Add storage node for Edge. More important than we originally thought. Typically the OS deployment has a small footprint. What is the minimum number of components you need to run OS at the edge? Can we run it without network? How about storage? Need compute at the very least. Looking at edge requirements within RA1 Chap 3-4. 2 or 3 servers at edge for HA. 3 is ideal. Two for failover is the minimum. Single server, with multiple compute nodes. All the Edge OS implementations are containerized to save resources on the edge HW. OpenShift, ADVA has a single server OS management tool. StarlingX supports that approach as well.

Cyborg can be extended to the Edge. Ussuri (the OS release after Train) supports FPGA from the beginning. Nova does not support FPGA natively. Cisco supports it, but they add proprietary secret sauce to make it work. Need to address the need for OS native support for FPGA. China Mobile is also using it, but they wrote extensions to do it.

Pankaj Goyal

Do we need to mention Central Office, Edge and Far Edge?

How about the different types of clouds:

Onsite Public: Public cloud hosted at Company location (but for Public use)

Rabi Abdel

It might make more sense to focus on consumption model (reserved, on-demand, etc.), operating model (managed vs self-managed), and SLA rather than public/private, as the private/public split is becoming more blurred and less relevant in my opinion.

Beth Cohen

Please define EnCloud in the doc. Also need to define multi-Cloud. Edge is still ill-defined in the marketplace and in the technology community, so it is important to state our assumptions and definitions for the purposes of the document. Should we start from the general and then drill down to the Telecom Edge Cloud, or go directly to the Telecom specific requirements? Mehmet – Use a more general term, Edge Cloud for example, rather than pinning it to the enterprise. Edge Cloud always has the characteristics of having constraints, limited resources, limited bandwidth, intermittent connectivity, etc. Need to incorporate the concept of multi-cloud into this document. Multi-cloud is public/private, container vs. VM, hybrid architecture. The difference is who owns the customers, who owns and dictates the infrastructure (AWS/VMWare partnership, for example). Wavelength is hosted by the Telecom, but managed and owned by Amazon. It can get quite complex because of all the components and relationships. Multi-cloud is dependent on a federated relationship between operators, vendors, private clouds, and public cloud hyperscalers. Then the technical angle is that the components need to be orchestrated and the tools to do that have to be available. Most of the vendors are moving their business models to stand up and manage their clouds that sit on top of the hyperscalers' infrastructure. Most of the non-hyperscaler vendors (VMWare, HP, Ericsson, etc.) don't have their own independent platforms anymore. Need to make sure that CNTT reference architectures can support these models. VNF/CNF tuning is separate from the tuning of the infrastructure. CNTT's mandate is to tune the infrastructure to support Telecom workloads.

Tomas Fredberg

We also talked about the necessity to cover the use case where multiple different Clouds are co-located in the same physical Site.

Example of this could be

Tomas Fredberg

We also had a debate around if and how higher layers of Telco services would be integrated in the Multi-Cloud architecture, e.g. NFaaS from any type of Vendor (Telco or HCP) offered cloud. This could be a Network Function at any level, from a UPF in the data plane all the way up to a complete Core or RAN.

We didn't reach any conclusion if that needed to be included, but I think we should spend a few discussions on this topic to ensure the Multi-Cloud map becomes complete.

We did spend time on whether these offered NFaaS needed to be CNTT compliant internally, and at least I felt we had rather different views on this.

In my view it is completely up to the company and organization offering the Service how they like to implement and optimize their creation of the Service, since none of that is visible outside of that organization. I would claim that CNTT has no play in prescribing how these offerings should be internally structured.

They are of course welcome to use the CNTT framework if they like to avoid vendor lock-in in their Service creation.

Rabi Abdel

In regards to the table above, it might make more sense to focus on consumption model (reserved, on-demand, etc.), operating model (managed vs self-managed), and SLA rather than public/private, as the private/public split is becoming more blurred and less relevant in my opinion.

Pankaj Goyal

Rabi,

Thanks. Will add another column with the consumption model.

The Public/Private in the above table is only w.r.t. the user base – Private is for Operator's users only with Public for external paying customers only.

The operating model is split into 3 categories – IaaS system provider and maintainer, and OAM. Does that work?

Pankaj Goyal

vF2F October 15, 2020, Minutes: 2020-10-15 - CNTT Edge Work stream Working Session

Pankaj Goyal

From IEEE Edge 2020 (emphasis mine):

Human beings have been cooperating, competing, and entertaining ourselves by providing and consuming all kinds of services. We build, deploy, publish, and discover services, and with the current prevailing technology, we use clouds that are formed of computers and servers connected by the Internet, and developed using wire-based and wireless technology. Many enterprises and large organizations have begun to adopt small and mobile devices, sensors and actuators that provide data and services to users or other devices, and those devices will become more intelligent as technology progresses. We must deal with huge amount of data, big data that must be transferred from/to distant, sometimes very distant sources, stored and processed by data and computer clouds. As direct service links between clouds may not enable fast enough data access, reliability could be jeopardized, availability cannot be completely guaranteed, and the entire system is subject to security attacks, there is a need for significant future improvements. Due to the sizes of enterprises and large organizations, some smaller clouds, that could be stationary as well as mobile, are being proposed to improve these metrics. This is where the concept of Edge Computing, together with the necessary and possible intelligent management and control at the edge, comes to play.

“Edge Computing” is a process of building a distributed system in which some applications, as well as computation and storage services, are provided and managed by (i) central clouds and smart devices, the edge of networks in small proximity to mobile devices, sensors, and end users; and (ii) others are provided and managed by the center cloud and a set of small in-between local clouds supporting IoT at the edge. This dual architecture allows for Edge Computing. Edge Computing is practically a cloud-based middle layer between the center cloud and the edges, hardware and software that provide specialized services. The problem is how to execute such a process, how to manage the whole system, how to define and create Fogs and Edges, provide workflow, and how to provide Edge Computing (compute, storage, networking) services. Many open problems still exist, as they are not even properly specified, as the Edge Computing itself is not fully yet defined.