...

3.1 FD.IO

Editor: John DeNisco

(

...

DRAFT)

FD.io (Fast data – Input/Output) is a collection of projects that support flexible, programmable packet processing services on a generic hardware platform. FD.io offers a landing site for multiple projects that foster innovation in software-based packet processing, with the goal of creating high-throughput, low-latency, and resource-efficient I/O services suitable for many architectures (x86, ARM, and PowerPC) and deployment environments (bare metal, VM, container).

...

FD.io’s Vector Packet Processor (VPP) is a fast, scalable, multi-platform network stack covering layers 2 to 4. It runs in Linux user space on multiple architectures, including x86, ARM, and Power.

Vector vs Scalar Processing

FD.io VPP is developed using vector packet processing, as opposed to scalar packet processing. Vector packet processing is a common approach among high performance packet processing applications. The scalar based approach tends to be favored by network stacks that don't necessarily have strict performance requirements.

Scalar Packet Processing

A scalar packet processing network stack typically processes one packet at a time: an interrupt handling function takes a single packet from a Network

...

- When the code path length exceeds the size of the microprocessor's instruction cache (I-cache), thrashing occurs: the microprocessor is continually loading new instructions. In this model, each packet incurs an identical set of I-cache misses.

- The associated deep call stack also adds load-store-unit pressure as stack locals fall out of the microprocessor's Level 1 data cache (D-cache).

Vector Packet Processing

In contrast, a vector packet processing network stack processes multiple packets at a time, called 'vectors of packets' or simply a 'vector'. An interrupt handling function takes the vector of packets from a Network Interface, and processes the vector through a set of functions: fooA calls fooB calls fooC and so on.
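The difference between the two models can be sketched in a few lines of Python. This is only an illustration of the loop structure, not VPP code: the stage functions `foo_a`, `foo_b`, and `foo_c` are hypothetical stand-ins for fooA, fooB, and fooC, and the counter of stage switches is an assumed, rough proxy for how often a different function's instructions have to be brought into the I-cache.

```python
from typing import Callable, Dict, List

Packet = Dict[str, int]

# Hypothetical stages standing in for fooA, fooB and fooC from the text.
def foo_a(pkt: Packet) -> None:
    pkt["ttl"] -= 1                 # e.g. decrement the time-to-live

def foo_b(pkt: Packet) -> None:
    pkt["checked"] = 1              # e.g. mark the packet as validated

def foo_c(pkt: Packet) -> None:
    pkt["out_port"] = pkt["dst"] % 4  # e.g. pick an output port

STAGES: List[Callable[[Packet], None]] = [foo_a, foo_b, foo_c]

def make_packets(n: int) -> List[Packet]:
    return [{"dst": i, "ttl": 64} for i in range(n)]

def process_scalar(packets: List[Packet]) -> int:
    """Scalar model: each packet walks the whole stage chain on its own."""
    stage_switches = 0
    for pkt in packets:
        for stage in STAGES:
            stage(pkt)
            stage_switches += 1     # a different function runs every call
    return stage_switches

def process_vector(packets: List[Packet]) -> int:
    """Vector model: each stage runs over the whole vector before the next."""
    stage_switches = 0
    for stage in STAGES:
        stage_switches += 1         # the stage's code is entered once...
        for pkt in packets:
            stage(pkt)              # ...and reused for every packet
    return stage_switches

if __name__ == "__main__":
    print(process_scalar(make_packets(256)), process_vector(make_packets(256)))
```

Both functions leave the packets in the same final state. What changes is the order of the work: in the vector loop the cost of entering a stage is paid once per vector rather than once per packet.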

...

The further optimizations that this approach enables are pipelining and prefetching, which minimize read latency on table data and parallelize the packet loads needed to process packets.

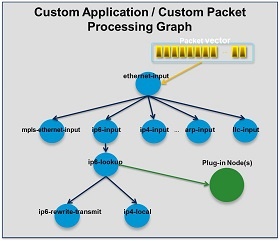

The Packet Processing Graph

At the core of the FD.io VPP design is the Packet Processing Graph: the packet processing pipeline is decomposed into a graph of nodes. This modular approach means that anyone can plug in new graph nodes, which makes VPP easily extensible and allows plugins to be customized for specific purposes.

Graph Nodes

...

The Packet Processing Graph creates software that:

- Is pluggable and easy to understand and extend

- Consists of a mature graph node architecture

- Allows control to reorganize the pipeline

- Is fast, with plugins treated as equal citizens

A Packet Processing Graph:

At runtime, the FD.io VPP platform assembles a vector of packets from RX rings, typically up to 256 packets in a single vector. The packet processing graph is then applied, node by node (including plugins), to the entire packet vector. The received packets traverse the graph nodes as a vector, and the network processing represented by each graph node is applied to each packet in turn. Graph nodes are small, modular, and loosely coupled. This makes it easy to introduce new graph nodes and rewire existing graph nodes.
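As a rough illustration of this node-by-node dispatch, the sketch below models a graph as a dictionary of node functions. Everything in it is an assumption made for the example: the node names (`classify`, `ip4`, `tx`, `drop`), the packet fields, and the convention that a node returns a mapping from next-node name to the sub-vector of packets bound for it. It is not VPP's actual API.

```python
from collections import defaultdict, deque
from typing import Callable, Dict, List

Packet = Dict[str, int]
Vector = List[Packet]
Node = Callable[[Vector], Dict[str, Vector]]

MAX_VECTOR_SIZE = 256  # "typically up to 256 packets in a single vector"

def classify(vector: Vector) -> Dict[str, Vector]:
    """Hypothetical node: send IPv4 frames onward, drop everything else."""
    out: Dict[str, Vector] = defaultdict(list)
    for pkt in vector:
        out["ip4" if pkt["ethertype"] == 0x0800 else "drop"].append(pkt)
    return out

def ip4(vector: Vector) -> Dict[str, Vector]:
    """Hypothetical node: decrement TTL, drop packets whose TTL expires."""
    out: Dict[str, Vector] = defaultdict(list)
    for pkt in vector:
        pkt["ttl"] -= 1
        out["tx" if pkt["ttl"] > 0 else "drop"].append(pkt)
    return out

def run_graph(graph: Dict[str, Node], rx_ring: Vector,
              start: str = "classify") -> Dict[str, Vector]:
    """Apply the graph, node by node, to one vector taken from the RX ring."""
    pending = deque([(start, rx_ring[:MAX_VECTOR_SIZE])])
    finished: Dict[str, Vector] = defaultdict(list)
    while pending:
        name, vector = pending.popleft()
        node = graph.get(name)
        if node is None:                 # no handler: treat as a terminal node
            finished[name].extend(vector)
            continue
        for next_name, sub_vector in node(vector).items():
            if sub_vector:
                pending.append((next_name, sub_vector))
    return finished

def make_rx_ring(n: int) -> Vector:
    return [{"ethertype": 0x0800 if i % 2 == 0 else 0x0806,
             "ttl": 1 if i % 4 == 0 else 64} for i in range(n)]

if __name__ == "__main__":
    result = run_graph({"classify": classify, "ip4": ip4}, make_rx_ring(300))
    print({name: len(vector) for name, vector in result.items()})
```

Because the graph is just a mapping from names to node functions, adding or rewiring a node in this sketch amounts to changing one dictionary entry, which mirrors the loose coupling described above.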

Plugins

Plugins are shared libraries and are loaded at runtime by FD.io VPP. FD.io VPP finds plugins by searching the plugin path for libraries, and then dynamically loads each one in turn on startup. A plugin can introduce new graph nodes or rearrange the packet processing graph. You can build a plugin completely independently of the FD.io VPP source tree, which means you can treat it as an independent component.
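The discovery-and-load sequence can be sketched by analogy. VPP loads shared libraries; the example below instead loads Python source files, purely to show the shape of the mechanism. The file suffix `_plugin.py`, the `register(graph)` entry point, and the dictionary used as a graph are all hypothetical conventions invented for this sketch.

```python
import importlib.util
import tempfile
from pathlib import Path
from typing import Dict, List, Tuple

def load_plugins(plugin_path: str, graph: Dict[str, object]) -> List[str]:
    """Search plugin_path and load each plugin in turn (analogy only)."""
    loaded = []
    for file in sorted(Path(plugin_path).glob("*_plugin.py")):
        spec = importlib.util.spec_from_file_location(file.stem, file)
        module = importlib.util.module_from_spec(spec)
        spec.loader.exec_module(module)   # run the plugin's top-level code
        module.register(graph)            # hypothetical entry point
        loaded.append(file.stem)
    return loaded

def demo() -> Tuple[List[str], List[str]]:
    graph: Dict[str, object] = {"classify": "built-in node"}
    with tempfile.TemporaryDirectory() as plugin_dir:
        # A plugin written independently of the host program's source.
        Path(plugin_dir, "acl_plugin.py").write_text(
            "def register(graph):\n"
            "    graph['acl'] = lambda vector: vector\n"
        )
        # Files that do not match the plugin naming pattern are ignored.
        Path(plugin_dir, "notes.txt").write_text("not a plugin")
        loaded = load_plugins(plugin_dir, graph)
    return loaded, sorted(graph)

if __name__ == "__main__":
    print(demo())
```

The host program only knows the search path and the entry point, so a plugin can be built and shipped separately and still add a node to the graph at startup.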

DPDK

Features

Layer 2

Layer 3

...