...

- Network Planning and Design: Generative AI for precise small cell placement, MIMO antennas, beamforming, and optimized backhaul connections.

- Self-Organizing Networks (SON): Harnessing AI-based algorithms for autonomous optimization and management of network resources.

- Shared Infrastructure: Leveraging 5G RAN infrastructure resources for training and inference, enhancing AI capabilities and network efficiency.

- Code Generation for Network Protocols: Enabling co-pilot functionality for generating software implementations of network protocols.

- Capacity Forecasting: Utilizing AI to optimize network capacity and to avoid unnecessary upgrades or poor network performance due to overloaded nodes or circuits.
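As an illustration of the capacity forecasting item above, the following is a minimal sketch, assuming utilization is sampled at regular intervals and grows roughly linearly. A plain least-squares trend line stands in for the AI/ML model here; the function names and the sample data are hypothetical and not taken from any LFN project.

```python
import math
from statistics import mean


def fit_linear_trend(samples):
    """Fit a least-squares line to equally spaced samples.

    Returns (slope, intercept), where x is the sample index 0..n-1.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    xs = list(range(n))
    x_bar = mean(xs)
    y_bar = mean(samples)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, samples))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def periods_until_threshold(samples, threshold):
    """Estimate how many future periods remain before the trend line
    reaches `threshold`.

    Returns 0 if the fitted value for the latest period is already at or
    above the threshold, and None if the trend is flat or declining.
    """
    slope, intercept = fit_linear_trend(samples)
    current = intercept + slope * (len(samples) - 1)
    if current >= threshold:
        return 0
    if slope <= 0:
        return None
    return math.ceil((threshold - current) / slope)


# Hypothetical weekly peak utilization (percent) of one node.
weekly_utilization = [50, 55, 60, 65, 70]
weeks_left = periods_until_threshold(weekly_utilization, threshold=85)
```

A planner could use an estimate like `weeks_left` to schedule an upgrade before the node overloads, rather than upgrading early or reacting after performance degrades. A production system would likely replace the linear fit with a model that handles seasonality and traffic bursts.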

Key drivers for AI/ML for Operations:

...

At their core, telecoms are technology companies driven by the need to scale their networks to serve millions of users reliably, transparently and efficiently. To achieve these ambitious goals, they need to optimize their networks by incorporating the latest technologies to feed the connected world's insatiable appetite for ever more bandwidth. To do this efficiently, the networks themselves need to become more intelligent. At the end of 2021, a bit over 2.5 years ago, LFN published its first white paper on the state of intelligent networking in the telecom industry. Based on a survey of over 70 of its telecom community members, the findings pointed to a still nascent field made up mostly of research projects and lab experiments, with a few operational deployments related to automation and faster ticket resolution. The survey did, however, highlight the keen interest its respondents had in intelligent networking and machine learning, and in their promise for the future of the telecom industry in general.

Fast forward to 2023 when, at the request of the LFN Governing Board and Strategic Planning Committee, the LFN AI Taskforce was created to coordinate and focus the efforts that were already starting to bubble up both in new project initiatives (Nephio and Network Super Blueprints, to name a few) and within existing projects (Anuket, ONAP). The Governing Board chartered the Taskforce to examine, and make recommendations on, the direction LFN should take. Some of the areas that the Taskforce looked into included:

...

Ironically, as generative AI and LLM adoption becomes widespread in many industries, telecom has lagged somewhat due to a number of valid factors. As covered in the previous white paper, the overall industry challenges remain the same: constant pressure to increase the efficiency and capacity of operators’ infrastructures in order to deliver more services to customers at lower operational cost. The complexity of network traffic data, and the lack of a common, standard understanding of it, remain a barrier to faster adoption of AI/ML for optimizing network service delivery. Some of the challenges that are motivating continued research and adoption of intelligent networking in the industry include:

...

- Need for High Quality Structured Data: Telecommunications networks differ from other AI human-computer data sets in that a large number of interactions between systems use structured data. However, due to the complexity of network systems and differences in vendor implementations, the degree of standardization of this structured data is currently very low, creating "information islands" that cannot be uniformly interpreted across systems. There is no "standard bridge" to establish correlation between them, so the data cannot be used as effective input for modern data-driven AI/ML applications. Large language models can be used to understand large amounts of unstructured operation and maintenance data (for example, system logs, work orders, operation guides and company documents, which are traditionally used in human-computer interaction or human-to-human collaboration scenarios) and to extract effective knowledge from it. That knowledge can guide further automated and intelligent operation and maintenance, effectively expanding the scope in which autonomous mechanisms can be applied.

- AI Trustworthiness: In order to meet carrier-level reliability requirements, network operation management needs to be strict, precise, and prudent. Although operators have introduced various automation methods in the process of building autonomous networks, organizations with real people are still responsible for ensuring the quality of communication and network services. In other words, AI is still assisting people and is not advanced enough to replace them. This is not only because responsibility for a loss caused by an AI algorithm cannot be clearly assigned (to the developer or to the user of the algorithm), but also because the deep learning models behind AI/ML algorithms are based on statistical characteristics. This leads to uncertain behavior, which makes the credibility of their results difficult to determine.

- Uneconomic Marginal Costs: According to the analysis and research in the Intelligent Networking, AI and Machine Learning White Paper, there are a large number of potential application scenarios for network AI technology, but independently building a customized data-driven AI/ML model for each specific scenario is uneconomical and hence unsustainable, both for research and for operations. Determining how to build an effective business model between basic computing power providers, general AI/ML capability providers and algorithm application consumers is an essential prerequisite for effective application in the field.

- Unsupportable Research Models: Compared with traditional data-driven AI/ML models dedicated to specific scenarios, the R&D and operation of large language models place higher demands on pre-training data scale, training cluster computing power, fine-tuning engineering, energy consumption, management and maintenance, and other resources. This raises the question of whether the telecommunications industry can build a shared model for research, development, application, operation and maintenance that could become a stepping stone for operators to realize high-end autonomous networks.

- Contextual data sets: Another hurdle that is often overlooked is the need for networking data sets to be understood in context. This means that networks need to work with all the layers of the IT stack, including but not limited to:

- Applications: Making sure that customer applications perform as expected with the underlying network

- Security: More important than ever as attack vectors expand and customers expect the networks to be protected

- Interoperability: The data sets must support transparent interoperability with other operators, cloud providers and other systems in the telecom ecosystem

- OSS/BSS Systems: The operational and business applications that support network services

...

Data Privacy and Security: The ability to monetize the data comes with a big caveat, which is that customer data must be handled with care to ensure data privacy, security and regulatory compliance. This requires clear policies and security procedures to ensure anonymity, safety and privacy at all times.
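One common building block for such procedures is replacing subscriber identifiers with keyed hashes before data leaves the operator's trusted environment. The following is a minimal sketch, assuming HMAC-SHA256 pseudonymization and hypothetical field names (`imsi`, `msisdn`). Pseudonymization alone does not guarantee anonymity or regulatory compliance, and key management is out of scope here.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Return a keyed SHA-256 digest of the identifier as a hex string.

    The same identifier and key always give the same pseudonym, so records
    can still be correlated without exposing the original value.
    """
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(record: dict, secret_key: bytes, id_fields=("imsi", "msisdn")) -> dict:
    """Return a copy of the record with identifier fields pseudonymized.

    `id_fields` lists hypothetical field names; the input is not modified.
    """
    cleaned = dict(record)
    for field in id_fields:
        if field in cleaned:
            cleaned[field] = pseudonymize(str(cleaned[field]), secret_key)
    return cleaned


# Hypothetical usage record; the key would normally come from a secrets store.
record = {"imsi": "001010123456789", "cell_id": "A1", "bytes_used": 1024}
shared = scrub_record(record, secret_key=b"example-key-not-for-production")
```

Using a keyed hash rather than a plain hash means that someone without the key cannot rebuild the mapping by hashing candidate identifiers.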

...

Simplification of complex data models: Large language models can be used to understand large amounts of unstructured operation and maintenance data (for example, system logs, work orders, operation guides and company documents, which are traditionally used in human-computer interaction or human-to-human collaboration scenarios) and to extract effective knowledge from it. That knowledge can guide further automated and intelligent operation and maintenance, effectively expanding the scope in which autonomous mechanisms can be applied.

Brief Overview of AI Research in Context

Lingli Deng, Andrei Agapi, Sandeep Panesar

...

This breakthrough in AI research is characterized by vast amounts of easily accessible data, extensive training models, and the ability to generate human-like text. These models are trained on enormous datasets from sources particular to the desired subject area. LLMs have changed the way natural language processing tasks are interpreted. Some of the areas that have been particularly fruitful include text generation, language translation, summarization, and automated question answering.

Generative AI (Gen AI)

Gen AI is a much broader category of artificial intelligence systems capable of generating new content, ideas, or solutions autonomously based on human text-based input. This includes using LLMs as a resource for content generation. As such, Gen AI seems able to produce human-like creative content in a fraction of the time a human would need. Content creation for web sites, images, videos, and music are a few of the capabilities of Gen AI. The rise of Gen AI provides numerous business use cases, from creating corporate logos, corporate videos, and saleable products for end consumers and businesses, to creating visual network maps based on the datasets being accessed. It may even be able to provide optimized maps for implementation to improve networking, either autonomously or with human intervention.

...

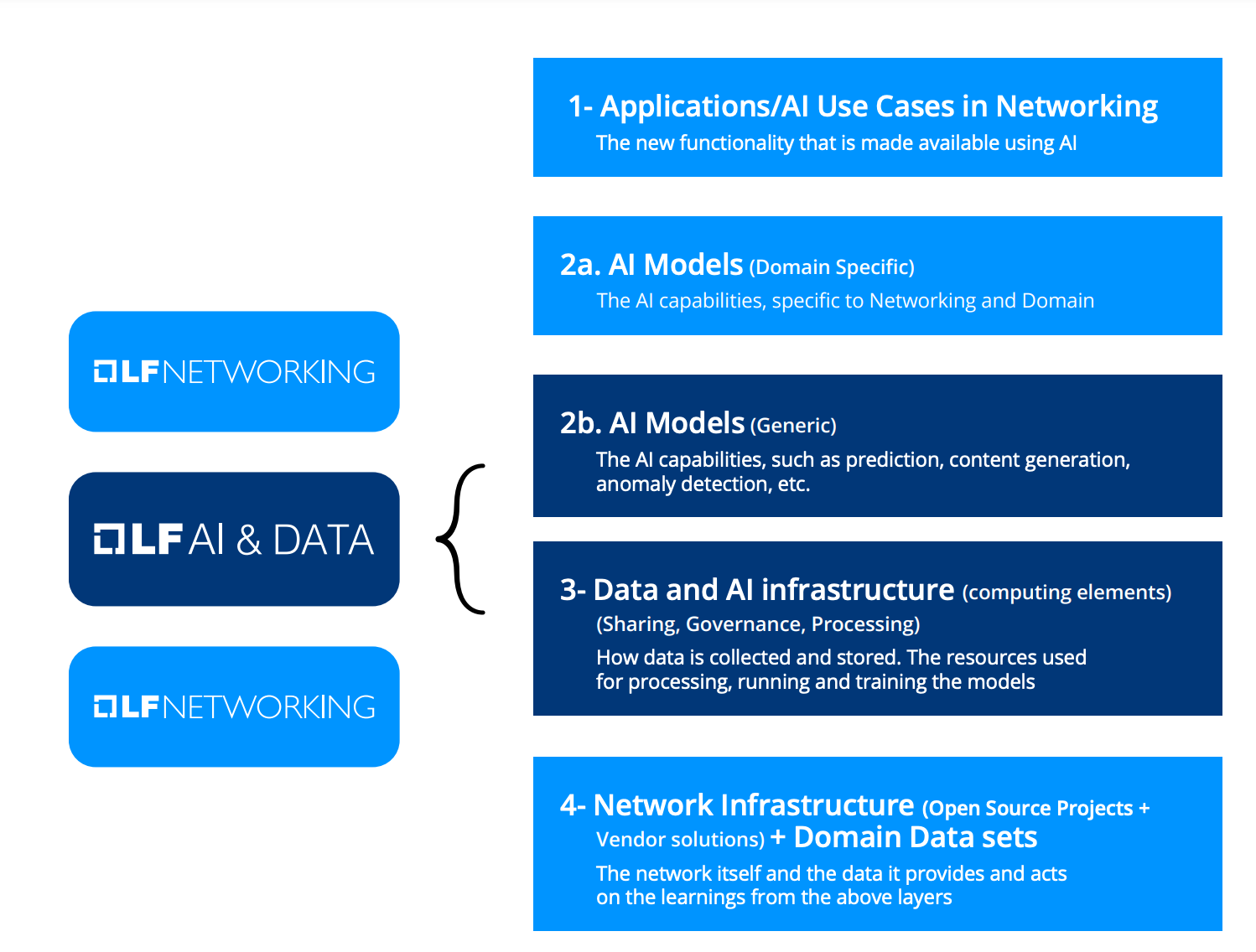

When considering the role of open source software in addressing the challenges of Network AI, it is important to understand the current landscape of projects and initiatives and how they came into existence. Several such initiatives have already laid the groundwork for building Network AI solutions, or are actively working on creating them. Building on these foundations, it is possible to envision what role open source software will play in unleashing the power of AI for future generations of networks. Some of the technologies required for Network AI are unique to the networking industry, and will have to be addressed by the existing OSS projects on the landscape or by the creation of additional ones. Other pieces of technology are more generic, and will come from the broad landscape of AI OSS. Here is a rough outline of the different layers of Networking AI and the source of the required technology:

Related Open Source Landscape

In addition, successful development of AI models for use in Networking relies on the availability of data that can be shared under a common license. The Linux Foundation created the Community Data License Agreement (CDLA) for this purpose. Using this license, end users can share data and make it available to researchers, who in turn develop the models and applications that benefit the end users. In addition to providing a legal framework for sharing research and innovation, Open Source provides a forum for creating communities with shared purpose.

The legal and technical framework for sharing research is important, but what is really essential for driving innovation is the ability of Open Source to provide a forum for creating communities with shared purpose. By bringing together people from various companies, with diverse skill levels and different cultural perspectives, these communities have the potential to spark real innovation in ways that are not really possible in any other context.

- Network communities

Open Source Software (OSS) communities have been successfully building projects that provide the building blocks of networks for over a decade now. OSS projects provide the underlying technology for all layers of the network, including the data/forwarding plane, control plane, management and orchestration. A vibrant ecosystem of contributing companies exists around these projects, consisting of organizations that have realized the value of OSS principles for speeding the development of networking technology:

- Shared effort to develop the foundation layers of the technology, freeing up more resources to develop the value-added layers

- A neutral platform for innovation where individuals and organizations can exchange ideas and develop best-of-breed technology solutions

- An opportunity to demonstrate thought leadership and domain expertise

- A collaboration space where producers and consumers of technology can freely interact to create new business opportunities

Many of the networking OSS projects are hosted by the Linux Foundation. Most of them are part of LF Networking, LF Connectivity and LF Broadband.

...

The same principles are now being applied to the shared development of Network AI technologies, where the open source community fosters innovation and potentially stimulates business growth. AI innovation has been strongly propelled by OSS projects that were initiated following the same principles of open collaboration. It is hard to imagine doing any modern AI development without relying heavily on OSS. OSS AI and ML projects range from development frameworks to libraries and programming tools. This OSS work means that data scientists who develop models for specific domains, such as networking and telecommunications, can focus on innovation by leveraging OSS projects to jump-start their work. It would be almost impossible to mention all the relevant OSS AI projects here, as there are already so many and the list keeps growing. The Linux Foundation AI & Data maintains a useful dynamic landscape here.

- Open AI models

When it comes to the popular subject of LLMs, there is a lot of debate about what an "Open AI model" really means. While it is out of the scope of this paper to try to settle any of those debates, there is a clear need for a definition of "open LLM". The sooner such a definition is created and blessed by the industry, the faster innovation can happen.

The area of open source LLMs, both with respect to generative models [11] and more specialized, discriminative models such as text classifiers, QA, summarization, and text embedding models [12], has been particularly vibrant and has evolved rapidly over the past 5 years. A number of global platforms widely used for sharing open models, code, datasets and accompanying research papers have been particularly instrumental in democratizing access to cutting-edge technologies and fostering an environment of global collaboration. Among these platforms, Hugging Face [30] has played a particularly pivotal role. At the time of this writing, Hugging Face hosts [31] over 350K models, 75K datasets and 150K demo apps (Spaces), in more than 100 languages. It also maintains Transformers, a popular open source library that facilitates integrating, modifying and performing downstream task adaptation for thousands of foundation models from this vast repository. It also provides the Datasets library, as well as several widely used benchmarks and leaderboards [11][12] that are instrumental for researchers and developers implementing LLM solutions. Other important platforms used by the AI/ML open source community in general (not necessarily LLM-focused) are Kaggle [32], used for public datasets and high-profile ML competitions in all areas, and Papers with Code [33], which links academic research papers to their respective code and implementations and provides benchmarks and leaderboards comparing competing solutions for a wide range of ML tasks.

...

In recent years, Linux Foundation Networking launched a set of Open Source Networking "Super Blueprints" that outline architectures for common networking use cases and are built using OSS technology. Those blueprints consist of collections of OSS projects and commercial products, integrated together by the open source community and documented for free use by any interested party. Several of these blueprints have started incorporating AI technologies, and we expect that trend to accelerate as existing blueprints inspire additional AI-driven solutions. One promising area is intent-based network automation. There is current work on blueprints that use NLP and LLMs to translate user intent, expressed in natural spoken language, into network requirements, and to generate a full network configuration based on those requirements. Such an approach can significantly simplify existing network operation processes and enable new services that end users can consume directly in an automated manner, commonly known as Network-as-a-Service (NaaS).
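The intent-to-configuration flow described above can be pictured as two steps: extracting requirements from free text, then rendering them into a configuration. The following is a minimal sketch in which simple keyword and regular-expression matching stands in for the NLP/LLM step. The `NetworkRequirements` fields and the configuration layout are hypothetical and do not reflect any Super Blueprint's actual schema.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class NetworkRequirements:
    """Requirements extracted from a user's intent (hypothetical schema)."""
    bandwidth_mbps: Optional[int] = None
    max_latency_ms: Optional[int] = None
    encrypted: bool = False


def parse_intent(text: str) -> NetworkRequirements:
    """Extract requirements from free text.

    Assumption: regex and keyword matching are a stand-in for the
    NLP/LLM component, which would handle far more varied phrasing.
    """
    lowered = text.lower()
    req = NetworkRequirements()
    bandwidth = re.search(r"(\d+)\s*(mbps|gbps)\b", lowered)
    if bandwidth:
        value = int(bandwidth.group(1))
        req.bandwidth_mbps = value * 1000 if bandwidth.group(2) == "gbps" else value
    latency = re.search(r"(\d+)\s*ms\b", lowered)
    if latency:
        req.max_latency_ms = int(latency.group(1))
    req.encrypted = "encrypt" in lowered or "secure" in lowered
    return req


def render_config(service_name: str, req: NetworkRequirements) -> dict:
    """Turn requirements into a configuration document (hypothetical layout)."""
    return {
        "service": service_name,
        "qos": {
            "min_bandwidth_mbps": req.bandwidth_mbps,
            "max_latency_ms": req.max_latency_ms,
        },
        "tunnel": {"encryption": "enabled" if req.encrypted else "disabled"},
    }


intent = "I need a secure link between two branches with 2 Gbps and latency under 20 ms"
config = render_config("branch-link", parse_intent(intent))
```

Keeping the extracted requirements in an explicit intermediate structure, rather than generating configuration directly from text, gives operators a point at which to validate the interpreted intent before anything is applied to the network.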

- Verification programs

Experience with OSS in other domains shows that whenever there is an OSS technology that powers commercial products or offerings, there is a need to validate the products to make sure they are properly using the OSS technology and are ready to serve the end users in a predictable manner. Such validation/verification programs have existed for a while as part of OSS ecosystems. They are often created and maintained by the same OSS community that develops the OSS projects themselves. The Cloud Native Computing Foundation (CNCF) has a successful "Certified Kubernetes" program that helps vendors and end users ensure that Kubernetes distributions provide all the necessary APIs and functionality. A similar approach should be applied to any OSS Networking AI projects. End users should have a certain level of confidence, knowing that the OSS based AI Networking solution they use will behave as expected.

...